New York Times R&D Team Launches a Pragmatic Use Case for CAI Technology in Journalism

Building off their previous work in the News Provenance Project, the New York Times R&D team has partnered with the CAI on a prototype exploring tools to give readers transparency into the source and veracity of news visuals.

Popular wisdom used to tell us that “a photograph never lies” and that we should trust in the still image. Nowadays we are soaked in information, much of it visual, and it has become much harder to distinguish fact from fiction, whether it comes from family and friends or professional media.

In addition, we sometimes receive doctored images, conspiracy theories, and fake news from outlets disguised as news organizations: content produced to further an agenda or simply to make money from advertising should it go viral.

Regardless of source, images are plucked out of the traditional and social media streams, quickly screen-grabbed, sometimes altered, and posted and reposted extensively online, usually without payment or acknowledgement and often lacking the original contextual information that might help us identify the source, frame our interpretation, and add to our understanding.

So how are we to make sense of this complex and sometimes confusing visual ecosystem?

“The more people are able to understand the true origin of their media, the less room there is for ‘fake news’ and other deceitful information. Allowing everyone to provide and access media origins will protect against manipulated, deceptive, or out-of-context online media.”

— Scott Lowenstein, NYT R&D

At the Content Authenticity Initiative (CAI), a community of major technology and media companies, we’re working to develop an open industry standard that will allow for more confidence in the authenticity of photographs (and then video and other file types). We are creating a community of trust, to help viewers know if they can believe what they see.

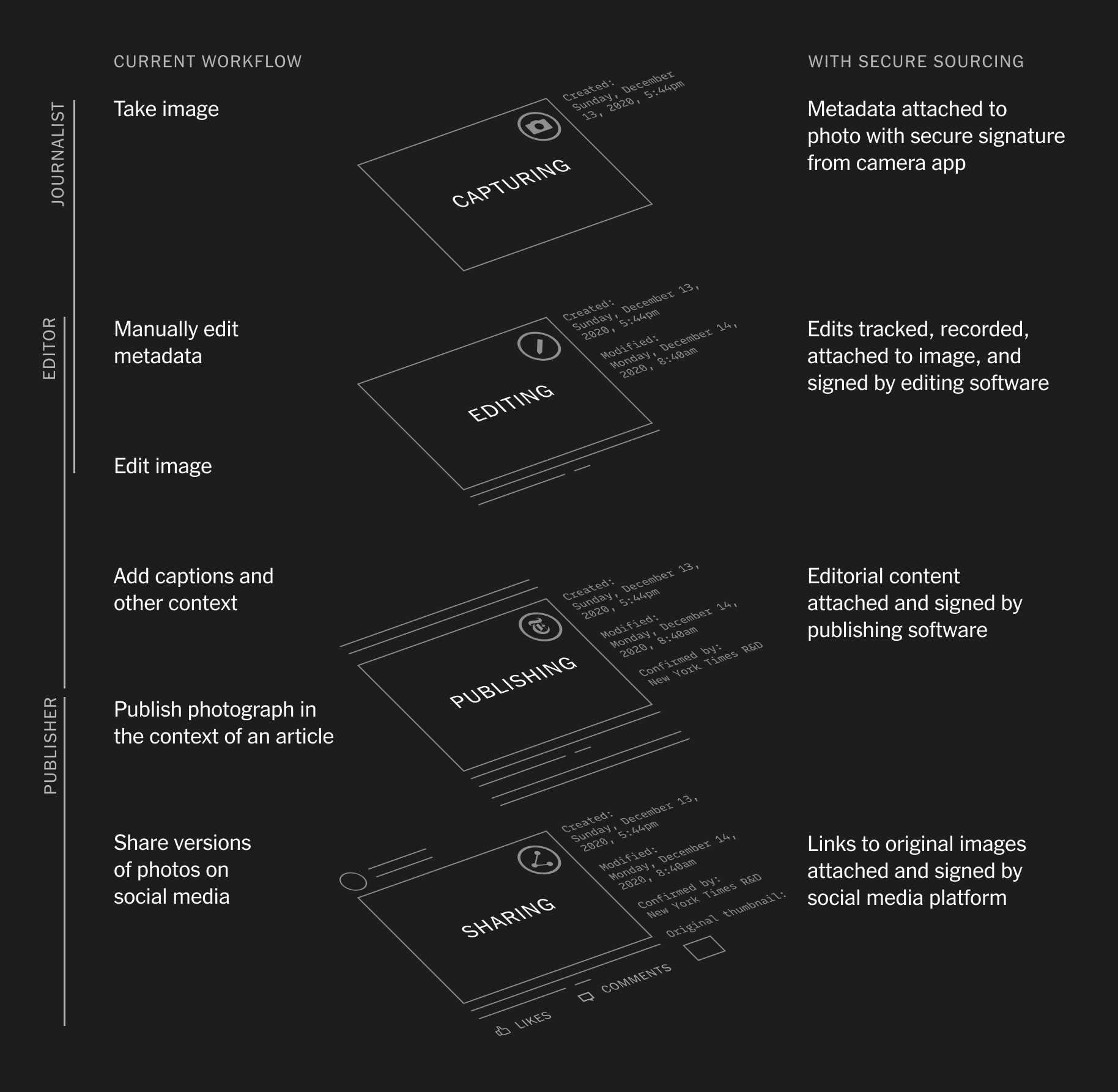

The New York Times Co., a founding member of the CAI (along with Adobe and Twitter), today unveiled a prototype of this system of end-to-end secure capture, edit, and publish.

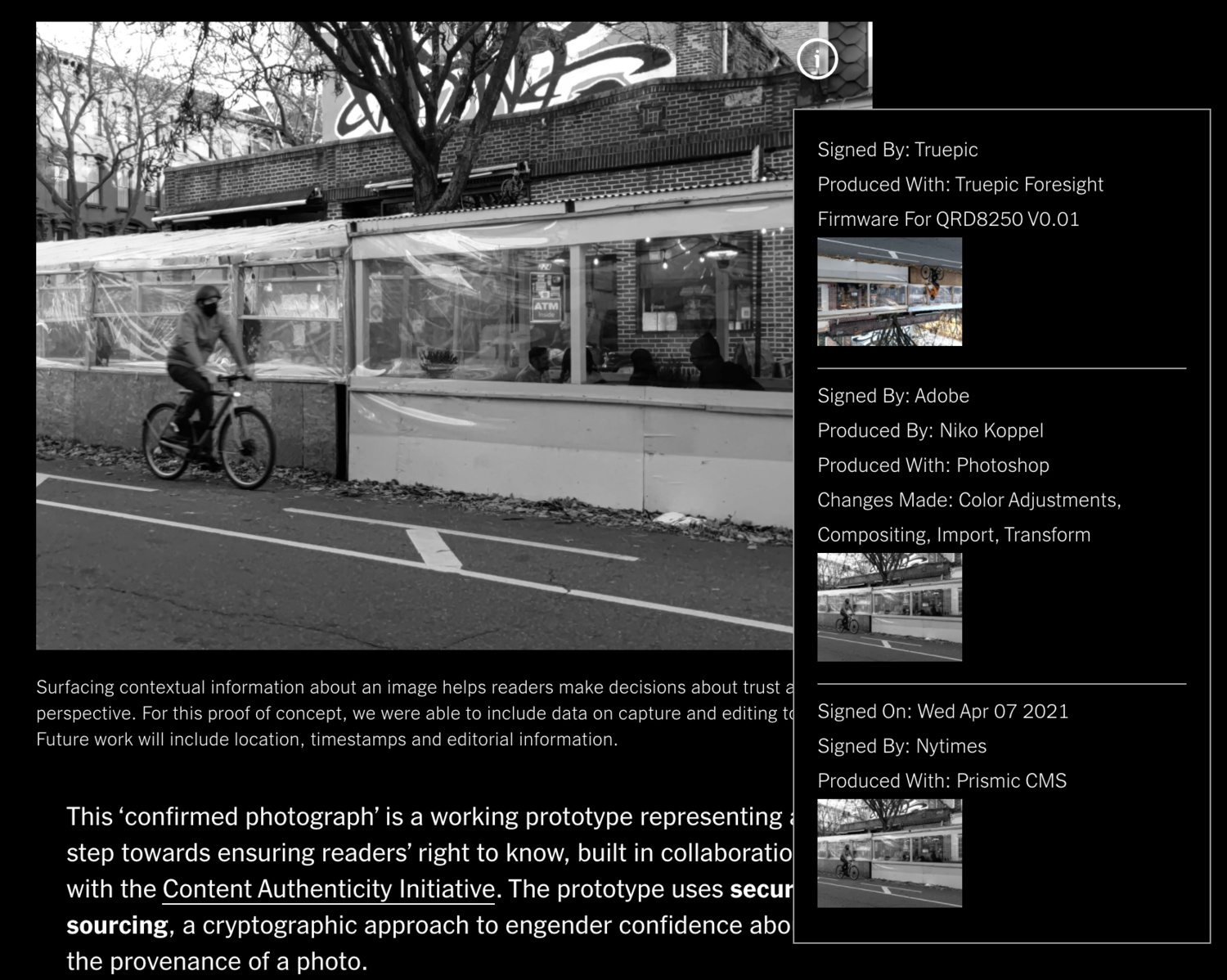

This example on the NYT R&D site shows what they call “secure sourcing,” using a Qualcomm/Truepic test device with secure capture, editing via Photoshop, and publishing via their Prismic content management system.

This important work demonstrates how a well-respected news outlet like the NYT is experimenting with CAI technology, giving us a hint of what’s possible at scale. This aligns with our goal of displaying a CAI logo next to images published in traditional or social media that gives the consumer more information about the provenance of the imagery, such as where and when it was first created and how it might have been altered or edited.

See an example of these important metadata displayed below on the R&D site, on the info panel at right.

The NYT R&D work is a further iteration of the technology that we detailed in our first case study here. Using the same Qualcomm/Truepic test device with secure camera capture that photographer Sara Lewkowicz used for us in November 2020, the NY Times R&D team created images with their own provenance data. With early access to the CAI software developer kit, they were able to securely display provenance data that is immutably sealed to their images and that also reflects edits made in the prerelease version of Photoshop.
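To make the idea of provenance "sealed" to an image more concrete, here is a toy sketch in Python. It is not the CAI software developer kit and does not reflect how the NYT prototype is built; the function names (`add_step`, `verify`) and the shared demo key are assumptions made purely for illustration, and a production system would rely on certificate-based signatures rather than a shared secret. The sketch only shows the general principle: each capture or edit step records a hash of the image and is linked to the step before it, so any later change to the image or its history can be detected.

```python
import hashlib
import hmac
import json

# Hypothetical demo key, for illustration only. A real system would use
# certificate-based signatures, not a shared secret.
DEMO_KEY = b"demo-signing-key"


def _seal(record: dict) -> str:
    """Return an HMAC-SHA256 over the canonical JSON form of a record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(DEMO_KEY, payload, hashlib.sha256).hexdigest()


def add_step(chain: list, action: str, image_bytes: bytes) -> list:
    """Append a step that binds the action to the image content
    and to the seal of the previous step."""
    record = {
        "action": action,
        "image_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "prev_seal": chain[-1]["seal"] if chain else None,
    }
    return chain + [{**record, "seal": _seal(record)}]


def verify(chain: list, final_image: bytes) -> bool:
    """Check every seal, every link, and that the last step matches the image."""
    if not chain:
        return False
    prev = None
    for step in chain:
        record = {k: v for k, v in step.items() if k != "seal"}
        if record["prev_seal"] != prev:
            return False
        if not hmac.compare_digest(step["seal"], _seal(record)):
            return False
        prev = step["seal"]
    return chain[-1]["image_sha256"] == hashlib.sha256(final_image).hexdigest()


captured = b"raw pixels from the camera"
edited = b"pixels after a crop"

chain = add_step([], "capture", captured)
chain = add_step(chain, "edit: crop", edited)

print(verify(chain, edited))              # True
print(verify(chain, b"tampered pixels"))  # False
```

In this sketch the published image verifies only if it matches the last recorded step and no earlier step has been rewritten, which is the property a reader-facing info panel would depend on.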

Seeing this secure system in place in a prototype from the New York Times Co. is a major step forward in the work we’ve forged with our community of major media and technology companies, NGOs, academics, and others working to promote and drive adoption of an open industry standard around content authenticity and provenance.

Having spent 10 years as a war photographer, followed by 13 years as director and VP of photography for the Associated Press, I see an urgent need for adoption of this open industry standard around content authenticity and provenance.

This will bolster trust in content among consumers, capture partners (such as Qualcomm and Truepic), editing partners (in this case, our colleagues at Adobe Photoshop), and publishers, such as the New York Times and others.

Following the 78 Days project from Starling Lab and Reuters, which offered an initial demonstration, we now have another pragmatic use case and proof of concept of our work.