How a voice cloning marketplace is using Content Credentials to fight misuse

Taylor Swift isn’t selling Le Creuset cookware. However, deepfake advertisements recently appeared that used video clips of the pop star and synthesized versions of her voice to make it look and sound as if she were offering a giveaway. Unsuspecting fans and others who trusted Swift’s brand clicked the ads and entered their personal and credit card information for a chance to win.

Public figures are prime targets for scams that are being created and scaled with help from voice cloning and video deepfake tools.

In July 2023, a video of Lord of the Rings star Elijah Wood appeared on social media. Originally created with Cameo, an app that lets people purchase personalized video messages from celebrities, the clip featured Wood sharing words of encouragement with someone named Vladimir who was struggling with substance abuse. “I hope you get the help that you need,” the actor said.

Soon after, it emerged that the heavily edited video was part of a Russian disinformation campaign that sought to insinuate that Ukrainian President Volodymyr Zelensky had a drug and alcohol problem. At least six other celebrities, including boxer Mike Tyson and Scrubs actor John McGinley, unwittingly became part of the scheme. Despite clumsy editing, the videos were shared widely on social platforms.

Now imagine if the effort required to create these videos dropped to zero. Instead of commissioning content on Cameo, someone could pick a celebrity, or gather enough footage of any individual, and use AI to make them say or do anything.

This challenge is intensifying with the spread of AI-enabled audio and voice cloning technology that can produce convincing deepfakes. Governments have started taking steps to protect the public; for example, the US Federal Trade Commission issued a consumer warning about scams enabled by voice cloning and announced a prize for a solution to address the threat. Both the Biden administration and the European Union have called for clear labeling of AI-generated content.

These scams and other non-consensual synthetic media, which range from fake product endorsements to geopolitical disinformation campaigns, increasingly target people who aren’t famous singers or politicians.

A commitment to verifiable AI-generated audio

Dmytro Bielievstov knows that top-down regulation isn’t enough. In 2017, along with Alex Serdiuk and Grant Reaber, the Ukraine-based software engineer co-founded Respeecher, a tool that allows anyone to speak in another person's voice using AI. “If someone uses our platform to pretend to be someone they are not, such as a fake news channel with synthetic characters, it might be challenging for the audience to catch that because of how realistic our synthetic voices are,” he said.

Keenly aware of the potential for misuse, the Respeecher team has embraced Content Credentials through the Content Authenticity Initiative’s (CAI) open-source tools. Content Credentials are based on the C2PA open standard, which is designed to verify the source and history of digital content. With an estimated 4 billion people around the world heading to the polls in over 50 elections this year, there’s an urgent need to ensure clarity about the origins and history of the visual stories and media we experience online. And there’s momentum for C2PA adoption across industries and disciplines.

We recently spoke with Dmytro about how Respeecher implemented Content Credentials, his tips for getting started, and why provenance is critical for building trust into the digital ecosystem.

This interview has been edited for length and clarity.

How would you describe Respeecher?

Respeecher lets one person perform in the voice of another. At the heart of our product is a collection of high-quality voice conversion algorithms. We don't use text as input; instead, we utilize speech. This enables individuals to not just speak but also perform with various vocal styles, including nonverbal and emotional expressions. For example, we enable actors to embody different vocal masks, similar to how they can put on makeup.

Who are you looking to reach with the Voice Marketplace?

Initially, our focus was on the film and TV industry. We’ve applied our technology in big studio projects, such as Lucasfilm’s limited series “Obi-Wan Kenobi,” where we synthesized James Earl Jones’ voice for Darth Vader.

Now, we’re launching our Voice Marketplace, which makes the technology we’ve used in Hollywood available to everyone. The platform allows individuals to speak using any voice from our library. This will enable small creators to develop games, streams, and other content, including amateur productions. While we’ll maintain oversight of content moderation and voice ownership, we’ll now have less control over the content’s destination and usage.

What motivated you to join the Content Authenticity Initiative?

First, the CAI aids in preventing misinformation. We do not allow user-defined voices (say, President Biden’s), as controlling how they are used would be quite difficult. Still, users should know that the content they’re consuming involves synthetic speech. As a leader in this space, we want to ensure that all generated content from our marketplace has Content Credentials. In the future, once browsers and content distribution platforms support data provenance, it will be easy for consumers to verify how the audio in a video was produced. If something lacks cryptographic Content Credentials, that absence will automatically raise suspicion about its authenticity.

Secondly, the initiative addresses the needs of content creators. By embedding credentials in the audio they produce, they can make it clear that the work is their own artistic creation.

How do Content Credentials work in Respeecher?

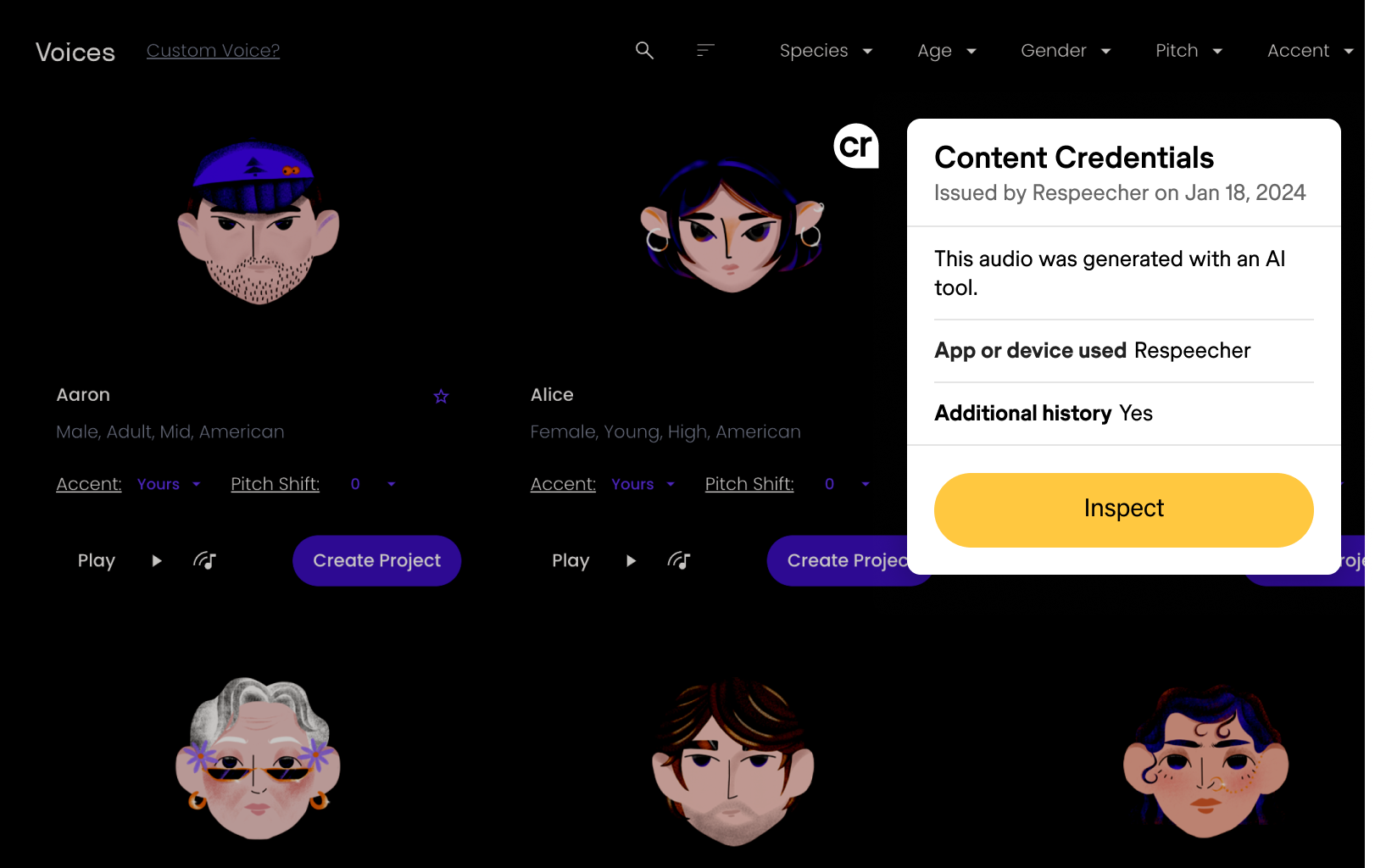

We have integrated the CAI’s open-source C2PA tool into our marketplace. Whenever synthetic audio is rendered on our servers, it is automatically cryptographically signed as a product of the Respeecher marketplace. When clients download the audio, it contains metadata with Content Credentials stating that it was converted into a different voice by Respeecher. GlobalSign, our third-party certificate authority, issued the certificate for our signing key, vouching that the key belongs to us, so anyone who receives our content can automatically verify that it was signed by us and not by an impersonator.
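The two-link trust chain described here (the certificate authority vouches for the marketplace’s key, and the marketplace’s key signs each file’s credentials) can be sketched in a few lines. This is a deliberately insecure toy, assuming “textbook RSA” with small well-known primes in place of the X.509 certificates and vetted signature algorithms that C2PA actually relies on; it is not the CAI tooling’s API, and every name in it (`issue_certificate`, `verify_credential`, the manifest text) is hypothetical. A verifier who trusts only the CA’s public key can check both links.

```python
import hashlib
from dataclasses import dataclass

# TOY "textbook RSA" with small, well-known primes. INSECURE, illustration only.
# Real C2PA signing uses X.509 certificates and standard algorithms via a vetted library.

@dataclass(frozen=True)
class KeyPair:
    n: int  # public modulus
    e: int  # public exponent
    d: int  # private exponent, kept secret by its owner

def make_keypair(p: int, q: int, e: int = 65537) -> KeyPair:
    d = pow(e, -1, (p - 1) * (q - 1))  # modular inverse (Python 3.8+)
    return KeyPair(n=p * q, e=e, d=d)

def _digest(data: bytes, n: int) -> int:
    # Hash the data, then reduce it into the toy key's range.
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % n

def sign(key: KeyPair, data: bytes) -> int:
    return pow(_digest(data, key.n), key.d, key.n)

def verify(n: int, e: int, data: bytes, signature: int) -> bool:
    return pow(signature, e, n) == _digest(data, n)

@dataclass(frozen=True)
class Certificate:
    subject: str
    n: int
    e: int
    ca_signature: int  # issuer's signature over (subject, n, e)

def _cert_body(subject: str, n: int, e: int) -> bytes:
    return f"{subject}|{n}|{e}".encode()

def issue_certificate(issuer: KeyPair, subject: str, key: KeyPair) -> Certificate:
    # The issuer vouches that this public key belongs to `subject`.
    body = _cert_body(subject, key.n, key.e)
    return Certificate(subject, key.n, key.e, sign(issuer, body))

def verify_credential(ca_n: int, ca_e: int, cert: Certificate,
                      manifest: bytes, manifest_sig: int) -> bool:
    # 1. Was the certificate issued by the CA the verifier trusts?
    body = _cert_body(cert.subject, cert.n, cert.e)
    if not verify(ca_n, ca_e, body, cert.ca_signature):
        return False
    # 2. Was the manifest signed by the key named in that certificate?
    return verify(cert.n, cert.e, manifest, manifest_sig)

# Hypothetical parties: a CA (standing in for GlobalSign) and the marketplace.
ca_key = make_keypair(15485863, 2147483647)
marketplace_key = make_keypair(1299709, 104729)
cert = issue_certificate(ca_key, "Respeecher Marketplace", marketplace_key)

manifest = b'{"generator": "Respeecher Marketplace", "action": "voice converted"}'
manifest_sig = sign(marketplace_key, manifest)
print(verify_credential(ca_key.n, ca_key.e, cert, manifest, manifest_sig))  # True

# An impersonator can self-sign a look-alike certificate, but not one from the CA.
impostor_key = make_keypair(7919, 104729)
fake_cert = issue_certificate(impostor_key, "Respeecher Marketplace", impostor_key)
fake_sig = sign(impostor_key, manifest)
print(verify_credential(ca_key.n, ca_key.e, fake_cert, manifest, fake_sig))  # False
```

The point of the chain is that a verifier never needs to know the marketplace’s key in advance: trust flows from the CA’s key, which is why obtaining a certificate from a recognized authority comes before any signing.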

The metadata isn’t a watermark but rather a separate component of the file. If the metadata is removed, verification tools find no Content Credentials, which signals to consumers that the file’s source is uncertain. If the file is modified after it’s downloaded (say, someone changes the name of the target voice), the cryptographic signature won’t match the file’s contents. So it’s impossible to manipulate the metadata without invalidating Respeecher’s signature.
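The tamper-evidence behavior can be illustrated with a manifest that records a hash of the audio bytes and is then signed, giving three outcomes: valid, altered, or missing. This is a minimal sketch, assuming HMAC with a shared secret as a stand-in for the public-key signature a real C2PA manifest carries; HMAC keeps the example standard-library only, but unlike a real signature it requires the verifier to hold the secret. The claim fields, key, and voice names are hypothetical.

```python
import copy
import hashlib
import hmac
import json
from typing import Optional

# STAND-IN: HMAC with a shared secret replaces the public-key signature a real
# C2PA manifest carries. Unlike a real signature, verifying it needs the secret.
DEMO_KEY = b"hypothetical-demo-key"

def _sign(claim: dict) -> str:
    payload = json.dumps(claim, sort_keys=True).encode()  # canonical byte form
    return hmac.new(DEMO_KEY, payload, hashlib.sha256).hexdigest()

def make_manifest(audio: bytes, target_voice: str) -> dict:
    claim = {
        "generator": "Respeecher Marketplace",
        "action": "voice converted",
        "target_voice": target_voice,
        # Binds the claim to these exact audio bytes.
        "audio_sha256": hashlib.sha256(audio).hexdigest(),
    }
    return {"claim": claim, "signature": _sign(claim)}

def check(audio: bytes, manifest: Optional[dict]) -> str:
    if manifest is None:
        return "no credentials: origin unknown"
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return "invalid: metadata altered"
    if manifest["claim"]["audio_sha256"] != hashlib.sha256(audio).hexdigest():
        return "invalid: audio altered"
    return "valid"

audio = b"\x00\x01\x02\x03"  # placeholder for rendered audio samples
manifest = make_manifest(audio, "Voice A")
print(check(audio, manifest))            # valid

renamed = copy.deepcopy(manifest)
renamed["claim"]["target_voice"] = "Voice B"
print(check(audio, renamed))             # invalid: metadata altered
print(check(audio + b"\x04", manifest))  # invalid: audio altered
print(check(audio, None))                # no credentials: origin unknown
```

A stripped manifest cannot be told apart from content that never had one, which is why missing credentials can only raise suspicion rather than prove manipulation.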

All voice-cloned audio files downloaded from the Respeecher Marketplace include Content Credentials for AI transparency.

What challenges did you encounter while implementing Content Credentials technology?

Surprisingly, the biggest challenge was obtaining keys from our certificate authority. We had to prove that we were a legitimate organization, which took several weeks and some back and forth with GlobalSign.

Another challenge was that cryptography, particularly public key infrastructure (PKI), can be hard to grasp for someone who isn’t an expert. Our team had to understand the specifics of C2PA, figure out appropriate configurations, and determine whether we needed a third-party certificate authority. These nuances took time and effort, especially since we don’t have a cryptography expert on our team. However, the CAI team and community were incredibly helpful in working through these challenges with us.

What advice do you have for other developers getting started with Content Credentials and the CAI’s open-source tools?

Start with CAI’s Getting Started guide, but then dedicate time to read the C2PA specification document. Although it is somewhat long and intimidating, it’s surprisingly comprehensible for non-experts.

Also, use ChatGPT to help explain complex concepts. Even though ChatGPT doesn’t know the technical details of C2PA (because its training data only extends up to a cutoff date), it still does a great job explaining concepts such as PKI and cryptographic signatures.

What's next for you when it comes to advances in AI safety and Content Credentials?

We’re planning to give users of our marketplace the option to add their own authorship credentials to the Content Credentials. Currently, the metadata indicates that the audio was modified by Respeecher, but it doesn't attribute the audio to its creator. Some users may prefer to remain anonymous, but others will choose to include their credentials.
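Optional authorship could look something like the following manifest definition, built as a Python dict in the JSON shape used in the CAI’s `c2patool` examples. This is a hypothetical sketch: the assertion labels (`c2pa.actions`, `stds.schema-org.CreativeWork`) follow older published examples and may be handled differently in newer versions of the C2PA specification, so check the current spec before relying on them; the title, author, and `claim_generator` values are illustrative only.

```python
import json
from typing import Optional

def build_manifest_definition(title: str, author: Optional[str] = None) -> dict:
    """Hypothetical manifest definition; labels follow older c2patool examples."""
    assertions = [
        # Records that the audio was edited (here: voice-converted).
        {"label": "c2pa.actions", "data": {"actions": [{"action": "c2pa.edited"}]}},
    ]
    if author is not None:  # opt-in: anonymous users simply omit this
        assertions.append({
            "label": "stds.schema-org.CreativeWork",
            "data": {
                "@context": "https://schema.org",
                "@type": "CreativeWork",
                "author": [{"@type": "Person", "name": author}],
            },
        })
    return {
        "claim_generator": "Respeecher Marketplace",  # illustrative value
        "title": title,
        "assertions": assertions,
    }

print(json.dumps(build_manifest_definition("clip.wav", author="Jane Doe"), indent=2))
```

Keeping the author assertion optional mirrors the choice described above: the tool’s own action record is always present, while personal attribution is added only when a user asks for it.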

Respeecher will continue to be at the forefront of initiatives to adopt data provenance standards across the AI synthetic media industry. It’s essential for companies to create a unified authentication layer for audio and video content, and content distribution platforms like YouTube and news websites have a crucial role to play in embracing this technology. Just as browsers warn users when a website lacks HTTPS, a similar mechanism could alert users to the source of an audio file, enhancing transparency and authenticity.

We are also closely watching how the concept of cloud-hosted metadata develops. Embedding hard-to-remove watermarks in audio and video signals without making them perceptible remains a largely unsolved problem. By storing metadata in the cloud and enabling lookups of pre-signed content, we could potentially simplify both authentication and synthetic media detection.
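A cloud-hosted lookup can be sketched as a registry keyed by a hash of the audio content, so a file whose embedded metadata was stripped can still be matched to its signed manifest. This is a minimal sketch under stated assumptions: `ProvenanceRegistry` is hypothetical, it lives in memory rather than in a cloud service, and it uses an exact SHA-256 hash, which any re-encoding or trimming would defeat; a practical system would likely need a robust audio fingerprint instead.

```python
import hashlib
from typing import Dict, Optional

class ProvenanceRegistry:
    """Hypothetical in-memory stand-in for a cloud-hosted manifest store."""

    def __init__(self) -> None:
        self._by_hash: Dict[str, dict] = {}

    @staticmethod
    def _key(audio: bytes) -> str:
        # Exact hash of the audio samples (not the container), so stripping
        # embedded metadata does not change the key. Re-encoding would.
        return hashlib.sha256(audio).hexdigest()

    def register(self, audio: bytes, manifest: dict) -> None:
        self._by_hash[self._key(audio)] = manifest

    def lookup(self, audio: bytes) -> Optional[dict]:
        return self._by_hash.get(self._key(audio))

registry = ProvenanceRegistry()
samples = b"\x10\x20\x30\x40"  # placeholder for rendered audio samples
registry.register(samples, {"generator": "Respeecher Marketplace"})

print(registry.lookup(samples))            # {'generator': 'Respeecher Marketplace'}
print(registry.lookup(samples + b"\x00"))  # None
```

The trade-off is that the provenance record no longer travels with the file, so it survives metadata stripping, but matching now depends on how well the lookup key tolerates changes to the signal.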