October 2025 | This Month in Generative AI: Supercharged Frauds and Scams

In January 2024, I wrote my first entry for this series, Frauds and Scams, where I discussed how deepfakes were being used to perpetrate various types of scams on individuals and institutions. In the intervening 21 months, two things have happened: (1) deepfake technology has improved significantly in both realism and accessibility, and (2) domestic and international cybercriminals have significantly ramped up the weaponization of deepfakes.

Deepfakes

Generative AI, which can generate images and videos from nothing more than a text prompt, continues on its ballistic trajectory toward hyper-photorealism and ever-greater accessibility.

With Google's release of Veo 3 earlier this year and OpenAI's recent release of Sora 2, full-blown audio/video synthesis has taken a giant leap on its path through the uncanny valley.

Sora 2 also added a new feature that allows you to create a digital likeness of your face and voice, a so-called cameo (a smart rebranding of "deepfake"). After a short onboarding process in which you record your face and voice, you can insert your digital likeness into any video with a descriptive prompt. You can also share your likeness with others, who can then use it in their own videos.

Above, for example, is a cameo I made with the prompt: "@hfarid4 in a hot air balloon over the pyramids of Egypt explaining what an eigenvector is." My likeness is not perfect, but I was impressed that Sora produced a correct and concise description of the mathematical concept of an eigenvector. I now wonder how long it will be before I can create entire video lectures using my avatar and simple text prompts, all while touring the seven wonders of the world.

OpenAI's cameo is surely just the beginning of the agentic AI revolution in which our identity and likeness will be used with (and, almost certainly, without) our permission. The ability to capture a person's identity is part of what is fueling the rise in AI-powered scams and frauds.

Scams and frauds

According to the FBI, more than 4.2 million fraud reports have been filed since 2020, resulting in over $50.5 billion in losses, with a growing portion stemming from deepfake scams. “The FBI continues to see a troubling rise in fraud reports involving deepfake media,” said FBI Criminal Investigative Division Assistant Director Jose Perez. “Educating the public about this emerging threat is key to preventing these scams and minimizing their impact. We encourage consumers to stay informed and share what they learn with friends and family so they can spot deepfakes before they do any harm.”

Today, deepfake scams take many forms, with victims ranging from grandparents and children to corporate executives and members of the US government.

Phishing: With as little as a 15-second sample of someone's voice, it is possible to clone that voice and then generate audio of the person saying anything the scammer wants. Any voice can then be weaponized against that person's family and loved ones. In early 2023, for example, a mother received a phone call in which what sounded like her teenage daughter, in distress, claimed to have been kidnapped and to fear for her life. The scammer demanded $50,000 to spare the child's life. After calling her husband in a panic, the mother learned that their daughter was safe at home.

Romance scams: In a romance scam, a scammer creates a fake online identity to build a romantic relationship with a victim and ultimately persuade that person to send money, often using tales of hardship to elicit sympathy. Although this type of scam is not new, deepfakes now allow scammers to adopt any persona and engage with their victims over video calls, adding a new level of sophistication and efficacy.

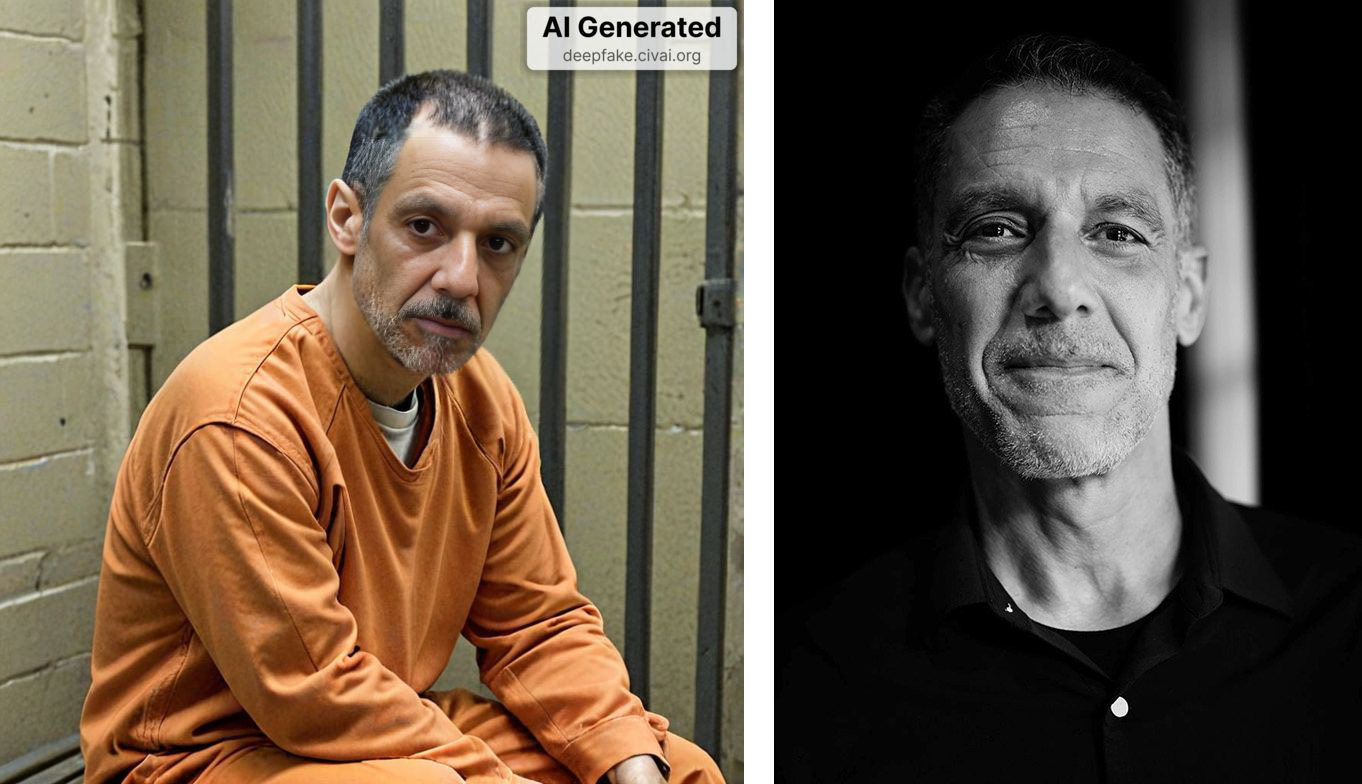

NCII: Before the less objectionable term "generative AI" took root, AI-generated content was referred to as "deepfakes," a term derived from the moniker of a Reddit user who in 2017 used this nascent technology to create non-consensual intimate imagery (NCII). Seemingly unable to shake off its roots, generative AI continues to be widely used to insert a person's likeness (primarily women, but also children) into sexually explicit material that its creators then share publicly as a form of humiliation or extortion. While it once took thousands of images of a person to digitally insert them into NCII, today only a single image is needed (as shown in the above image of me in prison). This means that the threat of NCII has moved from celebrities like Scarlett Johansson, with their large digital footprints, to anyone with a single photo of themselves online.

Fortune 500: AI-powered scams are not just targeting individuals; they are also coming for organizations of every size. A finance worker in Hong Kong, for example, was tricked into paying out $25 million to fraudsters who used deepfake technology to pose as the company's chief financial officer on a video conference call. This was not the first such case. A United Arab Emirates bank was swindled out of $35 million when a bank manager was persuaded to transfer the funds after receiving a phone call from the purported director of a company, someone the manager knew and with whom he had previously done business. It was later revealed that the voice had been AI-synthesized to sound like the director. These incidents are not isolated and are on the rise across all corporate sectors.

Impostor hiring: In July of this year, the FBI released its third warning to the public, the private sector, and the international community about Democratic People's Republic of Korea (North Korea) Information Technology (IT) workers, to raise awareness of the threat they pose to US businesses. North Korea is evading US and UN sanctions by placing these workers in jobs at private companies under false identities to illicitly generate substantial revenue for the regime. North Korean IT workers use a variety of techniques to disguise their identities, including deepfake impersonations.

Protecting yourself

Awareness of a threat is always the first line of any good defense. Consider yourself aware. Beyond awareness, it is important to recognize that today's deepfakes are often nearly indistinguishable from reality, so your eyes and ears will not always be a reliable defense.

On the technology and policy side, we have four weapons in our arsenal. First, Content Credentials (from the C2PA) continue to see wider deployment, including in videos created by Sora. Second, passive forensic techniques that operate in the absence of Content Credentials continue to be developed, although, unlike Content Credentials, they remain difficult to deploy at internet scale. Third, lawmakers and regulators both here in the US and abroad are beginning to wake up to the threat of deepfakes, passing new laws and regulations to protect consumers and organizations.

And, lastly, there are deepfake detection services. We at GetReal Security, for example, have built technology that can analyze a video or audio call in real time to determine whether the person at the other end of the call is who you think they are.

Deepfakes are evolving quickly, and with them comes an ever-growing shadow of doubt over much that we previously assumed to be real. The combination of awareness, technology, and policy can still keep authenticity within our grasp.

Author bio: Professor Hany Farid is a world-renowned expert in the field of misinformation, disinformation, and digital forensics. He joined the Content Authenticity Initiative (CAI) as an advisor in June 2023. The CAI is an Adobe-led community of media and tech companies, NGOs, academics, and others working to promote adoption of the open industry standard for content authenticity and provenance. Professor Farid teaches at the University of California, Berkeley, with a joint appointment in electrical engineering and computer sciences and the School of Information. He's also a member of the Berkeley Artificial Intelligence Lab, Berkeley Institute for Data Science, Center for Innovation in Vision and Optics, Development Engineering Program, and Vision Science Program, and he's a senior faculty advisor for the Center for Long-Term Cybersecurity. He is also a co-founder and Chief Science Officer at GetReal Security, where he works to protect organizations worldwide from the threats posed by the malicious use of manipulated and synthetic information.

He received his undergraduate degree in computer science and applied mathematics from the University of Rochester in 1989, his M.S. in computer science from SUNY Albany, and his Ph.D. in computer science from the University of Pennsylvania in 1997. Following a two-year post-doctoral fellowship in brain and cognitive sciences at MIT, he joined the faculty at Dartmouth College in 1999 where he remained until 2019. Professor Farid is the recipient of an Alfred P. Sloan Fellowship and a John Simon Guggenheim Fellowship, and he’s a fellow of the National Academy of Inventors.