September 2024 | This Month in Generative AI: Taylor Swift's AI Fears are Not Unfounded

News and trends shaping our understanding of generative AI technology and its applications.

The past year has seen a continued improvement in the ability of generative AI to create highly photorealistic images. More recently, AI-generated video has seen significant breakthroughs in photorealism. See for yourself in this recent online quiz (I scored 8/10).

Not surprisingly, in an election year, this technology has made its way into the world of politics. The day after the Harris-Trump presidential debate, Taylor Swift announced she would be voting for Kamala Harris. In her announcement on Instagram, Swift wrote "Recently I was made aware that AI of me falsely endorsing Donald Trump's presidential run was posted to his site. It really conjured up my fears around AI, and the dangers of spreading misinformation."

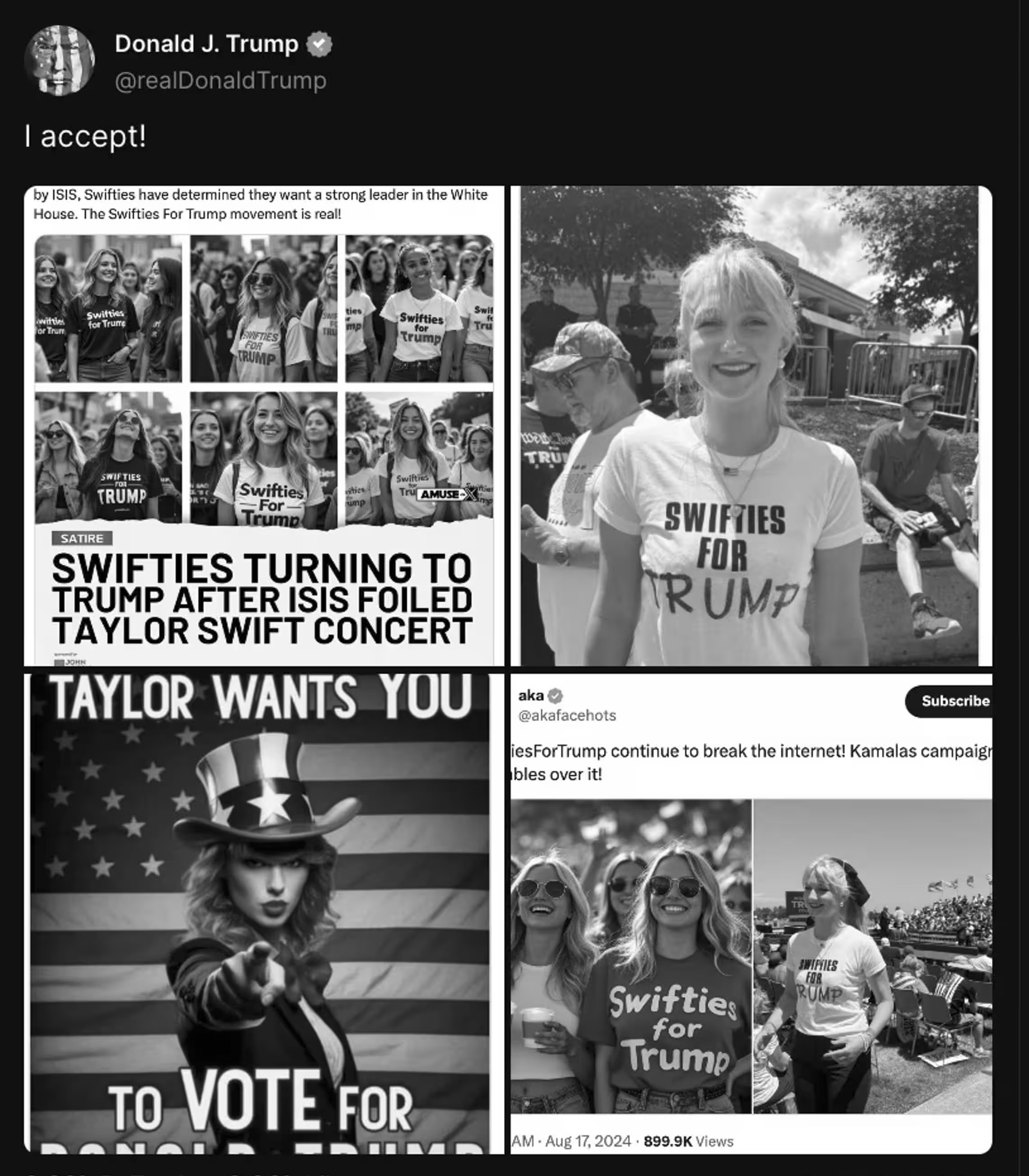

Shown above is a mosaic of the images in question (which I've converted to grayscale to reduce the spread of this misleading montage). The six photos in the upper left were too small to forensically analyze but they have the telltale visual signs of being AI-generated.

Based on the results of two of our models trained to detect traces of AI generation, I found no traces of AI in the image in the upper right or in the image in the lower right (right panel). The models, however, reveal telltale signs of AI generation in the image in the lower right (left panel). The image in the lower left is not photographic.

For the two images for which the models found no evidence of AI generation, a secondary shadow and perspective analysis reveals no physical inconsistencies, adding further evidence that they are most likely not AI generated (these images depict the same person). Combining artwork, real and fake photos into a single montage is a way of pushing misleading information.

Swift concluded her endorsement with a simple but eloquent message: "It brought me to the conclusion that I need to be very transparent about my actual plans for this election as a voter. The simplest way to combat misinformation is with the truth."

The fake Swift endorsement is not the only example of AI-powered disinformation in this presidential election. Soon after the Harris-Trump presidential debate on September 10th, Trump amplified baseless claims that Haitian immigrants in Springfield, Ohio were stealing and eating neighbors' pets. Shortly afterwards, a series of fake images began circulating online purporting to show just this. All, of course, were AI generated. This isn't just absurd, it is dangerous. Demonizing a population of immigrants puts their personal safety at risk.

Given that not everyone can (or wants to) distinguish the real from the fake, the creation and distribution of purported visual evidence is at best reckless. And, in the shadow of the devastating Hurricane Helene, social media was awash in fake images and conspiracies that officials reported were interfering with rescue and recovery efforts.

While it has always been possible to spread lies and conspiracies, AI-generated content is adding dangerous fuel to an already raging dumpster fire of disinformation, particularly as it is paired with the ease and speed with which lies can spread on social media.

All forms of generative AI (image, audio, and video) are rapidly passing through the uncanny valley, the unease people feel when a humanoid robot, or an image or video of a computer-generated human, becomes almost, but not quite, human-like.

While there is much to be excited about with regard to the democratization of technology that used to be only in the hands of Hollywood studios, we should not ignore the malicious uses of generative AI in the form of non-consensual sexual imagery, child sexual abuse imagery, small- to large-scale fraud, and the fueling of disinformation campaigns.

The fake Swift endorsement was not the first – nor will it be the last – example of AI-generated images being misused in this presidential election. While some generative-AI systems have placed reasonable guardrails on what users can create, X's recently released image generator boastfully removed most of these guardrails, allowing users to create everything from images of Mickey Mouse kicking a child to Harris and Trump romantically embracing.

In the world of generative AI, we as an industry and society are only as good as the lowest common denominator. In this regard, it takes only one player in this field to threaten the good work of the Content Authenticity Initiative and other efforts to mitigate the dangers of generative AI.

We need to continue to develop technologies to distinguish the real from the fake, pass sensible regulation, and educate the public. We know all too well, however, that this will not be enough, so we should all take a page from Taylor Swift and stand up and just tell the truth.


Author bio: Professor Hany Farid is a world-renowned expert in the field of misinformation, disinformation, and digital forensics. He joined the Content Authenticity Initiative (CAI) as an advisor in June 2023. The CAI is an Adobe-led community of media and tech companies, NGOs, academics, and others working to promote adoption of the open industry standard for content authenticity and provenance.

Professor Farid teaches at the University of California, Berkeley, with a joint appointment in electrical engineering and computer sciences at the School of Information. He’s also a member of the Berkeley Artificial Intelligence Lab, Berkeley Institute for Data Science, Center for Innovation in Vision and Optics, Development Engineering Program, and Vision Science Program, and he’s a senior faculty advisor for the Center for Long-Term Cybersecurity. His research focuses on digital forensics, forensic science, misinformation, image analysis, and human perception.

He received his undergraduate degree in computer science and applied mathematics from the University of Rochester in 1989, his M.S. in computer science from SUNY Albany, and his Ph.D. in computer science from the University of Pennsylvania in 1997. Following a two-year post-doctoral fellowship in brain and cognitive sciences at MIT, he joined the faculty at Dartmouth College in 1999 where he remained until 2019.

Professor Farid is the recipient of an Alfred P. Sloan Fellowship and a John Simon Guggenheim Fellowship, and he’s a fellow of the National Academy of Inventors.