Photo forensics for AI-generated faces

In this era of generative AI, how do we determine whether an image is human-created or machine-made? For the Content Authenticity Initiative (CAI), Professor Hany Farid shares the techniques used for identifying real and synthetic images — including analyzing shadows, reflections, vanishing points, environmental lighting, and GAN-generated faces. A world-renowned expert in the field of misinformation, disinformation, and digital forensics as well as an advisor to the CAI, Professor Farid explores the limits of these techniques and their part in a larger ecosystem needed to regain trust in the visual record.

This is the third article in a six-part series.

More from this series:

Part 1: From the darkroom to generative AI

Part 2: How realistic are AI-generated faces?

by Hany Farid

As I discussed in my previous post, generative adversarial networks (GANs) can produce remarkably realistic images of people that are nearly indistinguishable from images of real people. Here I will describe a technique for detecting GAN-generated faces, such as those typically found in online profiles

Below you will see the average of 400 StyleGAN2 faces (left) and 400 real profile photos from people with whom I am connected on LinkedIn (right).

Because the real photos are so varied, the average profile photo is fairly nondescript. By contrast, the average GAN face is highly distinct with almost perfectly focused eyes. This is because the real faces used by the GAN discriminator are all aligned in the same way, resulting in all the AI-generated faces having the same alignment. It seems that this alignment was intentional as a way to improve generation of more consistently realistic faces.

In addition to having the same facial alignment, the StyleGAN faces also appear from the neck up. By contrast, real profile photos tend to show more of the upper body and shoulders. It is these within-class similarities and across-class differences that we seek to exploit in our technique for detecting GAN-generated faces.

The average of 400 GAN-generated faces (left) and 400 real profile photos (right). (Credit: Hany Farid)

Starting with 10,000 faces generated by each of the three versions of StyleGAN (1, 2, 3), we learn a low-dimensional representation of GAN-generated faces. This learning takes two forms: the classic, linear, principal components analysis (PCA) and a more modern auto-encoder.

Regardless of the underlying computational technique, this approach learns a constructive model that captures the particular properties of StyleGAN-generated faces. In the case of PCA, this model is a simple weighted sum of a small number of images learned from the training images.

Once the model is constructed, we can ask if any image can be accurately reconstructed with this model. The intuition here is that if the model captures properties that are specific to GAN faces, then GAN faces will be accurately reconstructed with the model but real photos (that do not share these properties) will not.

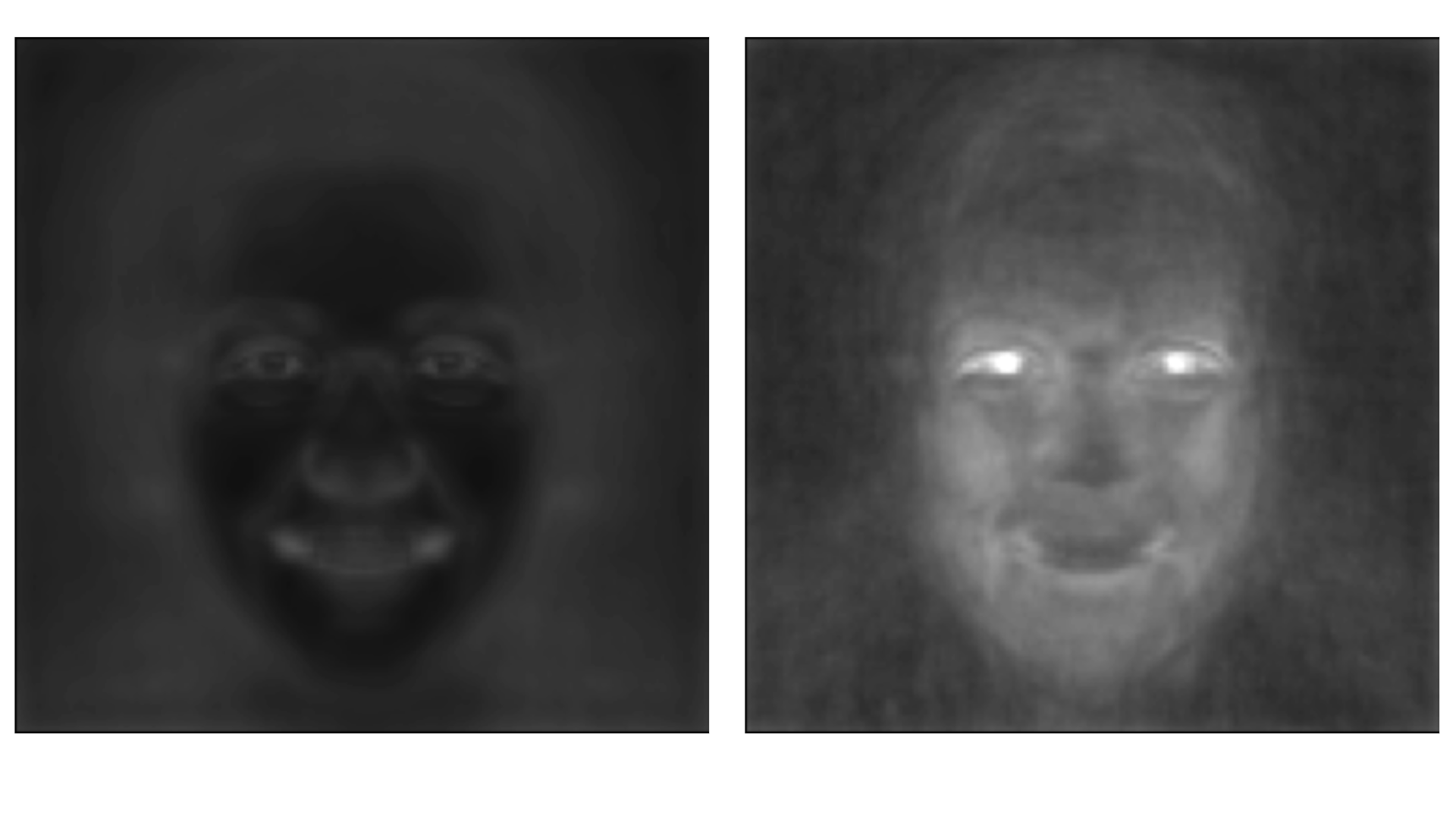

Below you will see the average reconstruction error for 400 GAN faces (left) and 400 real faces (right). They are displayed on the same intensity scale where, the brighter the pixel value, the larger the reconstruction error. Here you will see that the real faces have significantly higher reconstruction errors throughout, particularly around the eyes.

The average reconstruction error for 400 GAN-generated faces (left) and 400 real profile photos (right). (Credit: Hany Farid)

By simply computing this reconstruction error, we can build a fairly reliable classifier to determine whether a face was likely to have been generated by StyleGAN. We can make this classifier even more reliable by analyzing the eiGAN-face model weights needed for the full reconstruction.

Using this approach, we can detect more than 99% of GAN-generated faces, while only misclassifying 1% of photographic faces — those that happen to match the position and alignment of GAN faces.

Any forensic technique is, of course, vulnerable to attack. In this case, an adversary could crop, translate, and/or rotate a GAN-generated face in an attempt to circumvent our detection. We can, however, build this attack into our training by constructing our model not just on GAN faces, but also on GAN faces that have been subjected to random manipulations. (See the article in the Further Reading section below for more details on this attack and defense.)

No defense will ever be perfect, so the forensic identification cannot rely on just one technique. It must instead rely on a suite of techniques that examine different aspects of an image. Even if an adversary does not find a way to circumvent this particular detection, newer diffusion-based, text-to-image synthesis techniques (e.g., Dall-E, Midjourney, and Adobe Firefly) do not exhibit the same facial alignment as GAN-generated faces. As a result, they will fail to be detected by this specific technique. In my next post, I will describe a complementary forensic analysis that is more effective at detecting faces generated using diffusion-based techniques.

Further reading:

[1] S. Mundra, G.J.A. Porcile, S. Marvaniya, J.R. Verbus and H. Farid. Exposing GAN-Generated Profile Photos from Compact Embeddings. Workshop on Media Forensics at CVPR, 2023.

More from this series:

Part 1: From the darkroom to generative AI

Part 2: How realistic are AI-generated faces?

Author bio: Professor Hany Farid is a world-renowned expert in the field of misinformation, disinformation, and digital forensics. He joined the Content Authenticity Initiative (CAI) as an advisor in June 2023. The CAI is a community of media and tech companies, non-profits, academics, and others working to promote adoption of the open industry standard for content authenticity and provenance.

Professor Farid teaches at the University of California, Berkeley, with a joint appointment in electrical engineering and computer sciences at the School of Information. He’s also a member of the Berkeley Artificial Intelligence Lab, Berkeley Institute for Data Science, Center for Innovation in Vision and Optics, Development Engineering Program, and Vision Science Program, and he’s a senior faculty advisor for the Center for Long-Term Cybersecurity. His research focuses on digital forensics, forensic science, misinformation, image analysis, and human perception.

He received his undergraduate degree in computer science and applied mathematics from the University of Rochester in 1989, his M.S. in computer science from SUNY Albany, and his Ph.D. in computer science from the University of Pennsylvania in 1997. Following a two-year post-doctoral fellowship in brain and cognitive sciences at MIT, he joined the faculty at Dartmouth College in 1999 where he remained until 2019.

Professor Farid is the recipient of an Alfred P. Sloan Fellowship and a John Simon Guggenheim Fellowship, and he’s a fellow of the National Academy of Inventors.