How realistic are AI-generated faces?

In this era of generative AI, how do we determine whether an image is human-created or machine-made? For the Content Authenticity Initiative (CAI), Professor Hany Farid shares the techniques used for identifying real and synthetic images — including analyzing shadows, reflections, vanishing points, environmental lighting, and GAN-generated faces. A world-renowned expert in the field of misinformation, disinformation, and digital forensics as well as an advisor to the CAI, Professor Farid explores the limits of these techniques and their part in a larger ecosystem needed to regain trust in the visual record.

This is the second article in a six-part series.

More from this series:

Part 1: From the darkroom to generative AI

Part 2: How realistic are AI-generated faces?

Part 3: Photo forensics for AI-generated faces

Part 4: Photo forensics from lighting environments

Part 5: Photo forensics from lighting shadows and reflections

Part 6: Passive versus active photo forensics in the age of AI and social media

Before we dive into talking about specific analyses that can help distinguish the real from the fake, it is worthwhile to first ask: Just how photorealistic are AI-generated images? That is, upon only a visual inspection, can the average person tell the difference between a real image and an AI-generated image? Because the human brain is so finely sensitive to images of people and faces, we will focus on the photorealism of faces, arguably the most challenging task for AI-generation.

Before diffusion-based, text-to-image synthesis techniques like DALL-E, Midjourney, and Adobe Firefly splashed onto the screen, generative adversarial networks (GANs) were the most common computational technique for synthesizing images. These systems are generative, because they are tasked with generating an image; adversarial, because they pit two separate components (the generator and the discriminator) against each other; and networks, because the computational machinery underlying the generator and discriminator are neural networks.

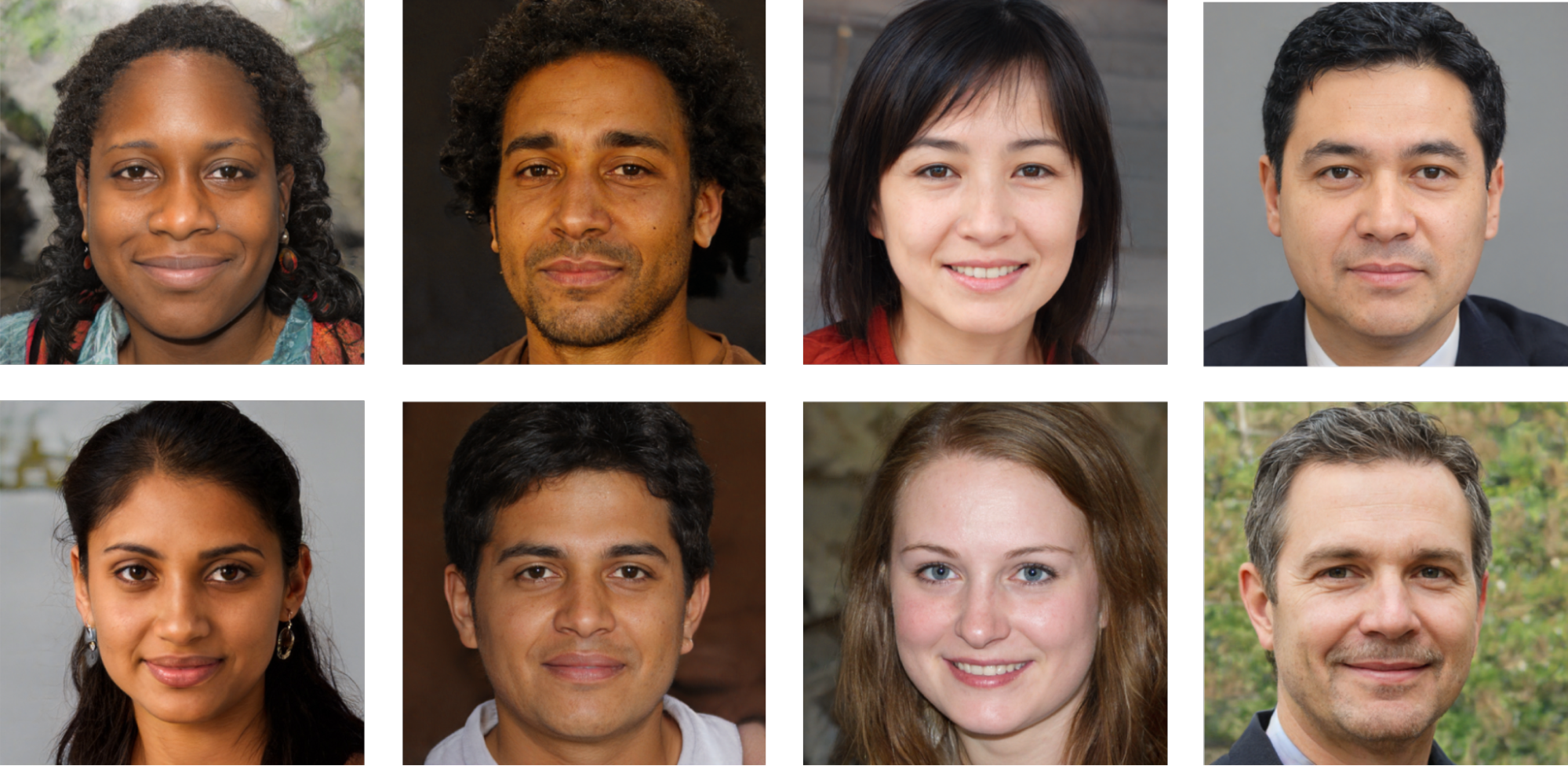

Although there are many complex and intricate details in these systems, StyleGAN (and GANs in general) follow a fairly straightforward structure. When tasked with creating a synthesized face, the generator starts by splatting down a random array of pixels, and then it feeds this first guess to the discriminator. If the discriminator, equipped with a large database of real faces, can distinguish the generated image from the real faces, the discriminator provides this feedback to the generator. The generator then updates its initial guess and feeds this update to the discriminator in a second round. This process continues with the generator and discriminator competing in an adversarial game until an equilibrium is reached when the generator produces an image that the discriminator cannot distinguish from real faces. Above you can see a representative set of eight GAN-generated faces.

In a series of recent perceptual studies, we examined the ability of trained and untrained observers to distinguish between real and synthesized faces. The synthetic faces were generated using StyleGAN2, ensuring diversity across gender, race, and apparent age. For each synthesized face, a corresponding real face was matched in terms of gender, age, race, and overall appearance.

In the first study, 315 paid online participants were shown — one at a time — 128 faces, half of which were real. The participants were asked to classify each as either real or synthetic. The average accuracy on this task was 48.2%, close to chance performance of 50%, with participants equally as likely to say that a real face was synthetic as vice versa.

In a second study, 219 new participants were initially provided with a brief training on examples of specific rendering artifacts that can be used to identify synthetic faces. Throughout the experiment, participants were also provided with trial-by-trial feedback informing them whether their response was correct. This training and feedback led to a slight improvement in average accuracy, from 48.2% to 59.0%.

While synthesized faces are highly realistic, there are some clues that can help distinguish them from real faces:

StyleGAN-synthesized faces have a common structure consisting of a mostly front-facing person from the neck up and with a mostly uniform or nondescript background.

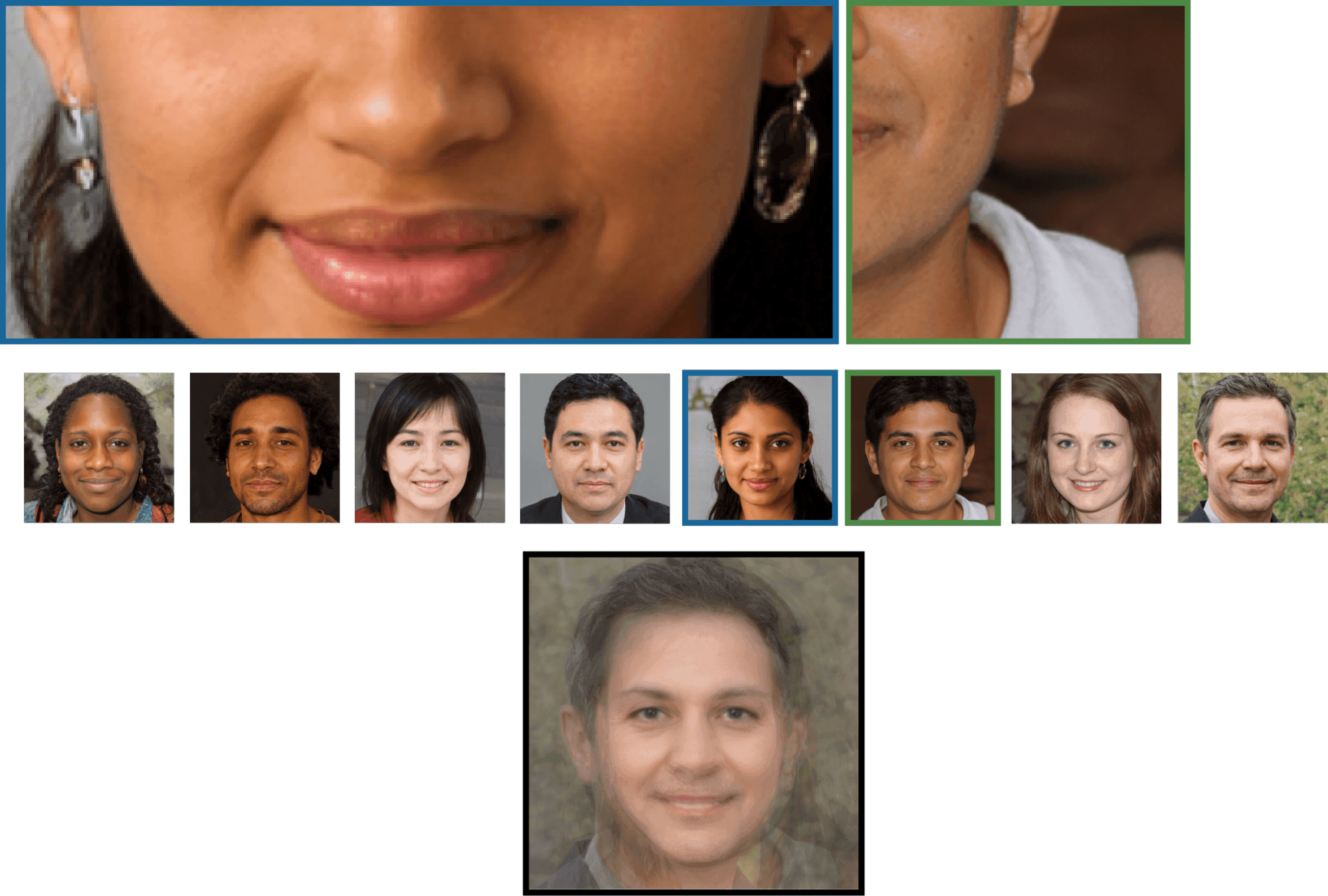

Facial asymmetries are telltale signs of synthetic faces. They are often seen in mismatched earrings or glasses frames. (See the top-left image in the figure below.)

When viewing a face, we tend to focus most of our attention in a "Y" pattern, shifting our gaze between the eyes and mouth and often missing some glaring artifacts in the background that may contain physically implausible structures. (See the top-right image in the figure below.)

StyleGAN-synthesized faces enforce a facial alignment in the training and synthesis steps that results in the spacing between the eyes being the same and the eyes being horizontally aligned in the image. (See the bottom image in the figure below.)

Eight GAN-generated faces (middle); a magnified view of the fifth face (top left) revealing asymmetric earrings; a magnified view of the sixth face (top right) revealing a stranger synthesis artifact on the shoulder; and an average of all eight faces (bottom) revealing that the eyes in each face are aligned to the same position and interocular distance. (Credit: Hany Farid)

Having largely passed through the uncanny valley, GAN-generated faces are nearly indistinguishable from real faces. Although we have not carried out formal studies, my intuition is that the more recent diffusion-based, text-to-image generation yields faces that are slightly less photorealistic. As this technology improves, however, it will surely lead to images that also pass through the uncanny valley.

Having seen that the visual system may not always be reliable in distinguishing the real from the fake, in my next post I will describe our first technique that exploits the facial alignment of GAN-generated faces to distinguish them from real faces.

Further reading:

[1] S.J. Nightingale and H. Farid. AI-Synthesized Faces are Indistinguishable from Real Faces and More Trustworthy. Proceedings of the National Academy of Sciences, 119(8), 2022.

More from this series:

Read Part 1: From the darkroom to generative AI

Author bio: Professor Hany Farid is a world-renowned expert in the field of misinformation, disinformation, and digital forensics. He joined the Content Authenticity Initiative (CAI) as an advisor in June 2023. The CAI is a community of media and tech companies, non-profits, academics, and others working to promote adoption of the open industry standard for content authenticity and provenance.

Professor Farid teaches at the University of California, Berkeley, with a joint appointment in electrical engineering and computer sciences at the School of Information. He’s also a member of the Berkeley Artificial Intelligence Lab, Berkeley Institute for Data Science, Center for Innovation in Vision and Optics, Development Engineering Program, and Vision Science Program, and he’s a senior faculty advisor for the Center for Long-Term Cybersecurity. His research focuses on digital forensics, forensic science, misinformation, image analysis, and human perception.

He received his undergraduate degree in computer science and applied mathematics from the University of Rochester in 1989, his M.S. in computer science from SUNY Albany, and his Ph.D. in computer science from the University of Pennsylvania in 1997. Following a two-year post-doctoral fellowship in brain and cognitive sciences at MIT, he joined the faculty at Dartmouth College in 1999 where he remained until 2019.

Professor Farid is the recipient of an Alfred P. Sloan Fellowship and a John Simon Guggenheim Fellowship, and he’s a fellow of the National Academy of Inventors.