
Content Authenticity Initiative Presents: Video Provenance and the Ethics of Deepfakes

A recap of our June members’ event.

By Chrysanthe Tenentes, CAI Head of Content

Malicious and pernicious deepfakes are just one kind of synthetic media; valid, benign, and, most notably, creative uses now abound as well. The mainstream narrative that deepfakes are dangerous is only one part of the story. While fully aware of what bad actors can do, at our event “Video Provenance and the Ethics of Deepfakes” at the end of June we set out to move past a one-sided view of the synthetic future and focus on the ethics, technology, and social shifts involved.

In his opening welcome, CAI Director Andy Parsons recognized new members including AFP, Camera Bits, Ernst Leitz Labs (a division of Leica), France Télévisions, Reface, Reynolds Journalism Institute, and The Washington Post. Membership and interest in our work continue to grow; see the full CAI member list on our newly relaunched website: contentauthenticity.org. Andy also announced that the C2PA standards organization will release a public draft of its technical specifications later this year. (Read more about the group’s founding here and news here.) These open standards will power the layer of objective facts on the internet at the center of our technology and provenance work, and they will cover both still images and video.

Panel moderator Nina Schick used this timely news to frame a broad conversation with VFX artist and Metaphysic.ai cofounder Chris Ume, Synthesia founder Victor Riparbelli, and video expert Vishy Swaminathan of Adobe Research. The group discussed the ethics involved in the creation of synthetic video and how to shift public perception away from its negative bias against synthetic media. Nina surveyed both the synthetic present and the synthetic future, looking beyond the real and potent risk of malicious deepfakes to the fast-approaching ubiquity of synthetic media.

The synthetic future will change the way we interact with all digital content and will be an AI-led paradigm change in content production, human communication, and information perception. Hyperrealistic AI-produced videos will be increasingly ubiquitous in our information ecosystem (think: panelist Chris Ume’s Deep Tom Cruise videos). Victor pushed this further, predicting that by 2025, 95% of the video content we see online will be synthetically generated. This opens up vast possibilities and a democratization of content production limited only by our creativity and imagination. Instead of needing professional camera equipment and complex production tools, you’ll be able to create realistic video with only your personal computer and approachable software. It may be as easy to create photorealistic, synthetic video as it is to write an email.

Chris added that there is a common misconception about how easy it is to create these hyperrealistic videos today: his Tom Cruise work took months, even with advanced technology and a talented actor. His new company, Metaphysic.ai, is building a set of AI tools so brands and creators can access synthetic media in a way that is simple, ethical, and responsible. Vishy reminded us that produced video has mixed natural and synthetic media for years, and that what used to be expensive post-production tools are now broadly available. This raises the creative potential for end users, but it can also reduce trust in video as a source of truth. This is where standardized measures around video authenticity can bolster trust while still enabling creativity.

This kicked off a lively discussion about the need for provenance solutions within video, rather than detection methods alone. Video is going through the same evolution as photography: 99.9% of photos we see online have been edited in some way, so merely detecting alteration is not enough of a signal to fully understand a piece of content. Victor noted that a provenance solution goes deeper than detection. Much of the problematic content online today is actually real video that has been misrepresented as being from a different time and place. Provenance can provide a scalable way to debunk “cheapfakes,” which are currently a far more widespread problem than deepfakes. A wide-ranging Q&A session with attendee questions closed out the hour. As always, there was not nearly enough time to address all the excellent questions posed, but we’ll make sure the areas of greatest interest are covered in future gatherings.

We rely on the voices and participation of our diverse community to ensure we’re addressing the most important topics and technologies in the world of digital content provenance. We ask that all CAI members and interested parties fill out the quick survey here: adobe.ly/CAIsurvey, which we’ll use to guide upcoming event programming and new member offerings. If you are not yet a member of the CAI, you can join us in our free, inclusive membership here. If you’re already a member, we encourage you to share the membership page with any interested colleagues. We welcome all to join our broad coalition of researchers, academics, hardware and software companies, publishers, journalists, and creators.

The Washington Post joins as a member of the CAI

The noted journalism powerhouse joins the CAI community, now with more than 250 members.

By Santiago Lyon, CAI Head of Education & Advocacy

As the CAI community grows, now with over 250 members, we’re delighted to welcome the venerable Washington Post to the group.

The Post is a noted journalism powerhouse that produces award-winning investigations that hold the powerful to account, long-form narratives that illuminate the stories and experiences of people around the world, and high-impact multimedia projects that push the boundaries of audio and visual storytelling today.

It’s also a place of tremendous investment, growing its newsroom to more than 1,000, adding first-of-its-kind teams like one focused on visual forensics, and bringing together engineers and journalists to ensure a superior experience across platforms for readers.

As a result, The Post’s audience continues to grow with nearly 88 million unique visitors in March of this year, a testament to its reach and breadth of coverage.

This large audience makes The Post an important addition to the CAI’s work around content authenticity and provenance (alongside The New York Times and the Gannett newspaper chain of 260 daily newspapers in the United States).

With a hyper-modern newsroom and an expanding international footprint, The Post will be a valuable partner in prototyping CAI technology and eventually implementing it to help readers better understand the provenance of images and video, further buttressing The Post’s already sterling reputation for first-class, award-winning journalism.

As we further our technical work internally, we’ll be looking to collaborate on more real-world use case prototypes. This will be crucial in our mission to help give consumers more transparency around image veracity. If you’re interested in partnering on prototypes, reach out to our team here.

Twitter joins the C2PA steering committee, furthering their commitment to scale trust in online content

Twitter adds its strong technical expertise to the growing alliance of companies, becoming the first social media platform to participate in our in-progress open standards specification work.

By Andy Parsons, Content Authenticity Initiative Director

Today, our longtime partner and supporter Twitter continues to lead the way as a trailblazing platform in social media by joining the Coalition for Content Provenance and Authenticity (C2PA). Twitter will join Adobe, Arm, BBC, Intel, Microsoft, and Truepic on the C2PA steering committee to further the work being done to scale transparency and trust in online content.

Twitter has long believed in our goal of creating an end-to-end content provenance system at scale, having been an essential co-author of our foundational technical white paper last summer. Fabiana Azevedo, Twitter’s misinformation product manager, was a key voice at our community event last December, which focused on restoring trust in an age when nonstop information is at our fingertips and an increasing amount of it is mis- or disinformation. As open standards specification work shifts to the newly established C2PA organization, Twitter’s decision to join and bring its strong technical expertise marks a critical step forward for the community and ecosystem of content provenance.

Claire Leibowicz, head of the AI and Media Integrity Program at The Partnership on AI, wrote recently in her excellent piece in Tablet Magazine on the urgency of countering misinformation on social media: “By rigorously field-testing different approaches, social media platforms will be able to better determine what works and what doesn’t for any future provenance information infrastructure. More broadly, platforms should work alongside others in industry, civil society, and academia to embed additional signals of authenticity into the web.” This is precisely what both the C2PA and CAI aim to do with camera manufacturers, software companies, human rights groups, publishers, and social media platforms and what the addition of Twitter to the C2PA will further.

As previously announced, the C2PA is a Joint Development Foundation project formed to create open technical standards through a formal coalition of cross-industry companies. In a few short months, the C2PA has made significant headway: we continue to expand the broad group of contributing members, to establish relationships with complementary standards organizations, and to create specialized working groups with the aim of publicly releasing draft specifications later this year. Interested parties can apply to participate in the C2PA here. As the CAI foundational specification work is incubated under the C2PA, the CAI is increasing its focus on education, advocacy, collaborative prototyping of real-world use cases, and interoperability of tools to realize our vision of widely adopted, cryptographically verifiable provenance.

Through both C2PA standards development and CAI community activities, we will together build a robust, cross-industry provenance solution to address misinformation. Twitter’s participation as a leader in the efforts, cemented today with C2PA membership, marks an important milestone on the journey to empowering consumers with transparency.

New York Times R&D Team Launches a Pragmatic Use Case for CAI Technology in Journalism

“The more people are able to understand the true origin of their media, the less room there is for ‘fake news’ and other deceitful information. Allowing everyone to provide and access media origins will protect against manipulated, deceptive, or out-of-context online media.”

By Santiago Lyon, Head of Advocacy & Education

Building off their previous work in the News Provenance Project, the New York Times R&D team has partnered with the CAI on a prototype exploring tools to give readers transparency into the source and veracity of news visuals.

Popular wisdom used to tell us that “a photograph never lies” and that we should trust in the still image. Nowadays, as we are soaked in information, much of it visual, it has become much harder to distinguish fact from fiction, whether the images come from family and friends or from professional media.

In addition, we sometimes receive doctored images, conspiracy theories, and fake news from outlets disguised as news organizations: content produced to further an agenda or simply to earn advertising revenue should it go viral.

Regardless of source, images are plucked out of the traditional and social media streams, quickly screen-grabbed, sometimes altered, and posted and reposted extensively online, usually without payment or acknowledgement and often lacking the original contextual information that might help us identify the source, frame our interpretation, and add to our understanding.

So how are we to make sense of this complex and sometimes confusing visual ecosystem?

At the Content Authenticity Initiative (CAI), a community of major technology and media companies, we’re working to develop an open industry standard that will allow for more confidence in the authenticity of photographs (and then video and other file types). We are creating a community of trust, to help viewers know if they can believe what they see.

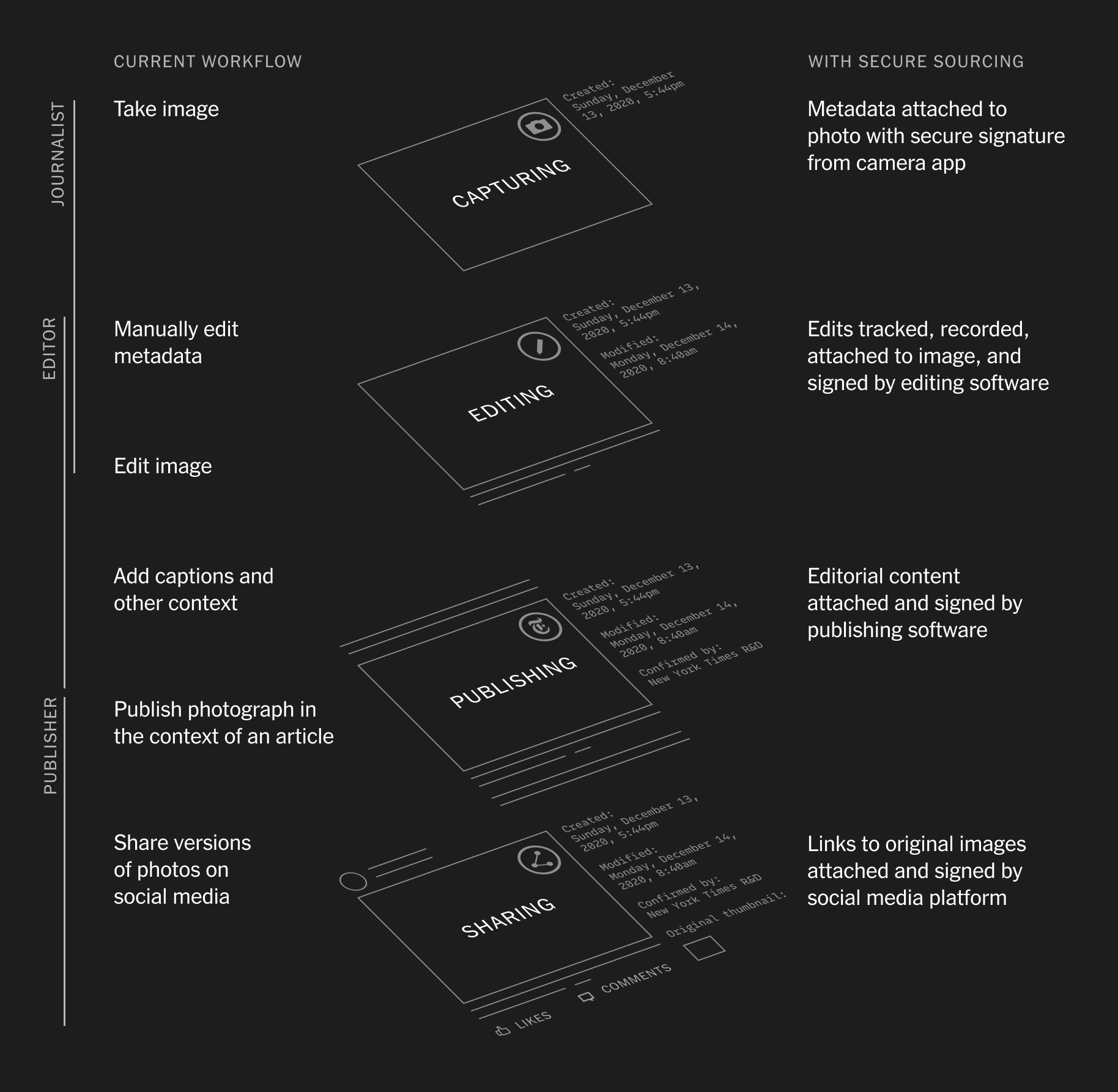

The New York Times Co., a founding member of the CAI (along with Adobe and Twitter), today unveiled a prototype of this end-to-end system for secure capture, editing, and publishing.

This example on the NYT R&D site shows what they call “secure sourcing,” using a Qualcomm/Truepic test device with secure capture, editing via Photoshop, and publishing via their Prismic content management system.

This important work demonstrates how a well-respected news outlet like the NYT is experimenting with CAI technology, giving us a hint of what’s possible at scale. This aligns with our goal of displaying a CAI logo next to images published in traditional or social media that gives the consumer more information about the provenance of the imagery, such as where and when it was first created and how it might have been altered or edited.

See below an example of this important metadata as displayed on the R&D site, in the info panel at right.

The NYT R&D work is a further iteration of the technology that we detailed in our first case study here. Using the same Qualcomm/Truepic test device that photographer Sara Lewkowicz used for us in November 2020 for secure camera capture, the NY Times R&D team created images with their own provenance data. With early access to the CAI software developer kit, they were able to securely display provenance data immutably sealed to their images, which also reflects edits made in the prerelease version of Photoshop.

Seeing this secure system in place in a prototype from the New York Times Co. is a major step forward in the work we’ve forged with our community of major media and technology companies, NGOs, academics, and others working to promote and drive adoption of an open industry standard around content authenticity and provenance.

Having spent 10 years as a war photographer, followed by 13 years as director and VP of photography for the Associated Press, I see an urgent need for adoption of this open industry standard around content authenticity and provenance.

This will bolster trust in content among consumers as well as capture partners (such as Qualcomm and Truepic), editing partners (in this case, our colleagues at Adobe Photoshop), and publishers, such as the New York Times and others.

Following the 78 Days project from Starling Lab and Reuters, which first demonstrated this approach, we now have another pragmatic use case and proof of concept for our work.

The Future of Trust in Photojournalism: CAI Members’ Event Recap

A look at our spring event, featuring Lucy Nicholson of Reuters and Jonathan Dotan of Stanford University and the Starling Project, hosted by Santiago Lyon and Andy Parsons.

By Chrysanthe Tenentes, CAI Head of Content

On March 31, we hosted our first members’ community event (virtually, of course): The Future of Trust in Photojournalism. The highlight was a fantastic conversation featuring Reuters photojournalist Lucy Nicholson and Stanford Center for Blockchain Research fellow and Starling Project co-founder Jonathan Dotan. Our head of Education and Advocacy, Santiago Lyon, led the conversation, which centered on the work Reuters and the Starling Project did from March 2020 through the release of the 78 Days secure photojournalism archive in February 2021.

Jonathan laid out the work of the Starling Project (you can find much more on their website, which does an excellent job of explaining the technology behind the end-to-end process they tested through the 78 Days project). The Starling team also prototyped the capture-to-publish process with Reuters photojournalists covering the California primaries in March 2020 and developed a process of secure capture (on mobile phones, as well as a Canon DSLR), distributed storage, and verification of images. Lucy Nicholson was one of the photographers who contributed to, and whose work inspired, the resulting 78 Days project, shot between the November 2020 election and the transfer of power on Inauguration Day in January 2021.

Jonathan Dotan of Stanford University and Starling Lab describes the Capture | Store | Verify process used in the 78 Days prototype.

Lucy weighed in from the perspective of a working photojournalist with a compelling case for why a new layer of trust is needed within visual content, now more than ever before. She explained, “As documentary photojournalists, we try to represent the truth of news events—and the visual context of news events—as best as we can. We typically do that by sharing a wide range of photographs, to show context, and to allow an audience to make up their minds about something. Disinformation, up until now, has had abstract effects on our lives as photojournalists. In the last year in particular, it’s had very tangible effects on journalists’ physical safety.”

A selection of the images with secure metadata from the 78 Days photojournalism archive from Reuters and the Starling Lab.

Lucy added valuable context, explaining that attaching secure metadata to photographs “gives some integrity to the photo and helps us with our task of explaining to the public the value of news photojournalism. We are ambassadors of media literacy. That has become our job; it never used to be. It’s imperative that we all play a part in that.” She followed up by saying that it is important to discover and use “any technical tools that can help retain the integrity of photojournalism and uphold the responsibility we have to show people we photograph and document in a vulnerable place in their lives we’re trustworthy.” We need to reestablish trust in photojournalism, not just for consumers and readers but for the subjects of news at home and abroad. It is a human rights issue.

Jonathan reiterated the importance of protecting photojournalists and, drawing on the Starling project’s findings, laid out two techniques for doing so. First, data can be digitally signed to prove that a photo was taken at a certain time and place (should the photographer choose to disclose those details, a reminder that privacy is one of the underlying principles of the CAI). Second, photos can be sealed on the camera device itself, in case the camera is seized or the photographer is arrested. For both reasons, it is crucial to have camera manufacturers involved in our work. As Jonathan pointed out, “phones have more advanced cryptographic tools than most camera devices. Consumer hardware can bridge the gap of what professional cameras cannot do right now. And this all should remain open source, so code is available to all. Starling, along with other human rights organizations, feels very strongly about this so that these tools will not be turned into tools of surveillance.” Open technology coupled with a layer of ethics is essential for protecting photojournalists.

A crucial part of the work that the CAI is doing centers around convening “all the right players,” as Jonathan mentioned. He went on to say, “How extraordinary it is that everyone who has a stake in this—the commercial side, NGOs, academics, and more, are all in the same room here. We have an incredible opportunity to shape this dialogue within the CAI, which means it’s not just a catalyst for technical development, but also work around user literacy as we capture the transition from unauthenticated photographs to authenticated photos.” We at the CAI absolutely agree and are focused on continuing this kind of community facilitation and the prototype support we gave to Reuters and the Starling Project and look forward to similar collaborations in the future. If you are interested in being involved, from any stage of the capture-to-publish process, please reach out (see below).

As CAI Director Andy Parsons noted in closing the event, no single organization can build the crucial layer of trust on the internet. In founding the C2PA, Adobe is furthering its commitment to open standards, while the growing, diverse CAI community advances the mission through discourse, prototypes, and guiding input. We at the CAI are proud of what we have done so far with emerging standards, but we have a long journey of development ahead. We welcome diverse use cases, ideas, and collaboration. To connect, reach out to us via email. And look for C2PA draft specifications to become publicly available later this year.

We fielded more thoughtful questions at The Future of Trust in Photojournalism than we expected, so thank you all for being so engaged! We’re folding many of them into the FAQ section on our site, which we’re in the process of updating. More on that soon.

Top left: CAI Head of Education & Advocacy Santiago Lyon; bottom left: CAI Director Andy Parsons; middle: Reuters senior photojournalist Lucy Nicholson; right: Stanford University fellow and co-founder of Starling Project Jonathan Dotan.

Plans are under way for our next event, which will be open to members of the Content Authenticity Initiative. If you are not yet a member of the CAI, you can join us in our free, inclusive membership here. If you’re already a member, we encourage you to share the membership page with any interested colleagues or collaborators.

NFTs & Provenance: How CAI protects creators and collectors alike

Imagine strolling through downtown Manhattan in New York City and happening upon an art gallery. You walk inside and immediately see a piece that catches your eye. Could this really, truly be a Rothko?

By Will Allen and Andy Parsons

Illustrations by Pia Blumenthal

Imagine strolling through downtown Manhattan in New York City and happening upon an art gallery. You walk inside and immediately see a piece that catches your eye. Could this really, truly be a Rothko? Unsure, you ask the gallery manager. They respond with confidence: “We have an exact record of everyone who has ever bought or sold this artwork.”

At first, you can’t believe your luck. A Rothko! But then your college philosophy classes kick in: did that response really answer your question? Is knowing everyone who has ever bought and sold a painting the same as knowing who painted the painting?

No, it isn’t. Welcome to the world of NFTs.

If you are unfamiliar with cryptoart and non-fungible tokens, take a few minutes and read these excellent overviews here, here, and here.

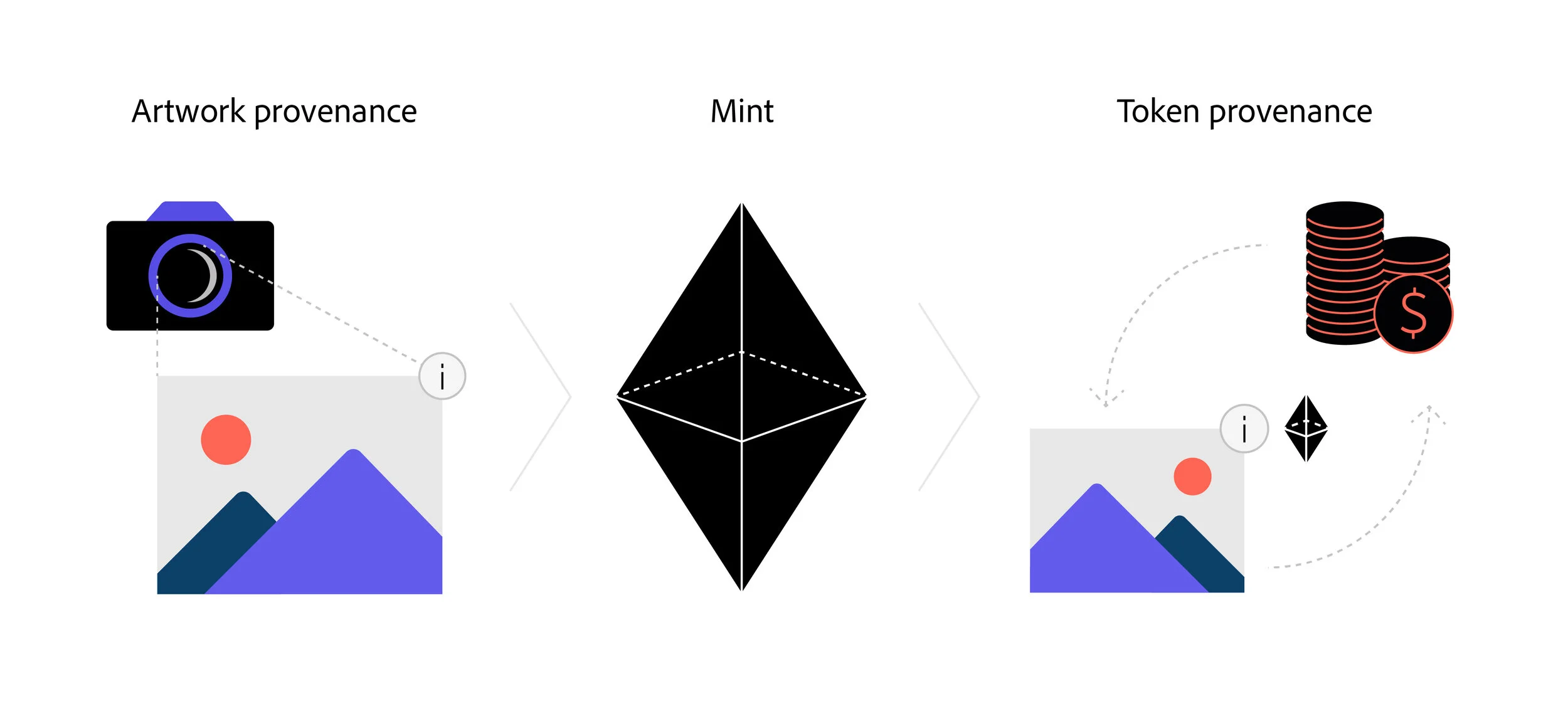

NFTs provide a public, verifiable audit trail of everyone who has bought, sold, or transferred the token. Any changes in ownership of the token are immutably recorded. Despite some of the real issues in the current landscape (such as the environmental footprint), that is a remarkable innovation.

Yet NFTs, as they are structured today, provide this provenance trail only as far back as the moment the token was minted. Knowing everyone who has bought and sold a piece of art doesn’t necessarily tell you who actually created it or if it is genuine. Albert Wenger correctly notes that in the NFT ecosystem, one of the most important problems to be solved is “around people asserting authorship to works they didn’t create.” If the value of NFTs continues to grow, the number of fraudulent sales of inauthentic art pieces could grow as well.

Though semantic purists will bristle at this suggestion, you can think of this as the difference between token provenance and artwork provenance. The artwork an NFT references requires its own parallel, connected history of creation.

This matters for two big reasons. First, for the creator: what prevents a scammer from listing their work and reselling it to unsuspecting collectors? Second, for the collector: how do they know the artwork they are purchasing was actually created by the artist?

Solving the provenance problem

We propose a solution: combining the attribution standards we’ve built through the Content Authenticity Initiative with the token provenance inherent in NFTs. In the open standard the CAI has been building, a creative work has cryptographically verifiable, tamper-evident metadata attached to it with claims about who created that asset and how it was created or edited.
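To make “cryptographically verifiable, tamper-evident metadata” more concrete, here is a minimal Python sketch of the general idea: a claim about who created an asset and how it was edited is bound to a hash of the asset’s bytes, and the claim is then signed. This is not the CAI or C2PA format. The field names (`asset_sha256`, `creator`, `actions`), the helper functions, and the demo key are hypothetical, and HMAC-SHA256 from the standard library stands in for the certificate-based digital signatures a real implementation would use.

```python
import hashlib
import hmac
import json

# Hypothetical shared demo key. A real provenance system would use an
# asymmetric, certificate-backed signature instead of a shared secret.
SIGNING_KEY = b"demo-key-not-for-production"


def _sign(claim: dict) -> str:
    """Sign a canonical (key-sorted) JSON encoding of the claim."""
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_claim(asset_bytes: bytes, creator: str, actions: list[str]) -> dict:
    """Bind creator and edit-history claims to a hash of the asset."""
    claim = {
        "asset_sha256": hashlib.sha256(asset_bytes).hexdigest(),
        "creator": creator,
        "actions": actions,
    }
    return {"claim": claim, "signature": _sign(claim)}


def verify_claim(asset_bytes: bytes, manifest: dict) -> bool:
    """Return True only if the claim is untampered and matches the asset."""
    # 1. Has the claim itself been edited since it was signed?
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return False
    # 2. Do the asset's current bytes still match the hash in the claim?
    actual = hashlib.sha256(asset_bytes).hexdigest()
    return actual == manifest["claim"]["asset_sha256"]
```

Verification fails in two distinct cases: when the claim has been edited (the signature no longer matches) and when the artwork’s bytes have changed (the hash no longer matches). That separation is what makes the metadata tamper-evident rather than merely descriptive.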

Today, an NFT contract links to the underlying digital art, but the digital art file itself says nothing verifiable about its provenance. By utilizing CAI attribution standards, we can now do both. The NFT continues to show token provenance, and the digital art itself now carries tamper-evident, cryptographically verifiable artwork provenance.

How CAI code can protect creators in an NFT world

This technique could emerge in two phases. Initially, embedding provenance data in the asset being minted would ensure that the buyer of the NFT has access to the verifiable history and creator details of the asset. Current marketplaces could filter by embedded artwork provenance, possibly resulting in a premium on that work.

In today’s NFT world, we could take one of these beautiful photographs by Sara Lewkowicz and list it for sale on any number of open NFT marketplaces. There would be nothing in the NFT or the photo itself to show that Sara, not us, was the real photographer.

But we recently partnered with Sara to add tamper-evident metadata to some of her work. If Sara ever chose to list this work for sale (via an NFT, a stock photography service, or a direct negotiation), the purchaser could verify this metadata to be assured that she created it.

Later, with deeper integration into the minting process itself, the connection between a given NFT and its provenance-enhanced artwork could be strengthened by enabling a two-way linkage. The artwork provenance attached to the asset could be updated each time the work changes hands and the embedded data can contain key information such as the cryptocurrency wallet address, the transaction amount, and the time of the transfer.
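A minimal sketch of how such an updatable, two-way record might be kept, assuming a simple hash chain: each transfer entry stores the NFT token ID, the buyer’s wallet address, the transaction amount, and the time, plus the hash of the previous entry (the first entry chains from the artwork’s own hash). The class, method, and field names are hypothetical and do not reflect any CAI, C2PA, or marketplace API; a production design would also sign each entry.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(entry: dict) -> str:
    """SHA-256 over a canonical (key-sorted) JSON encoding of an entry."""
    return hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()


@dataclass
class ArtworkProvenance:
    """Hypothetical record linking an artwork's hash to an NFT token."""

    asset_sha256: str
    token_id: str
    transfers: list = field(default_factory=list)

    def record_transfer(self, wallet: str, amount: str, timestamp: str) -> str:
        # Each entry points at the previous one, so earlier history
        # cannot be rewritten without breaking every later link.
        prev = self.transfers[-1]["entry_hash"] if self.transfers else self.asset_sha256
        entry = {
            "prev": prev,
            "token_id": self.token_id,
            "wallet": wallet,
            "amount": amount,
            "timestamp": timestamp,
        }
        entry["entry_hash"] = _digest(entry)  # hash computed before the key is added
        self.transfers.append(entry)
        return entry["entry_hash"]

    def chain_is_intact(self) -> bool:
        prev = self.asset_sha256
        for entry in self.transfers:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev"] != prev or _digest(body) != entry["entry_hash"]:
                return False
            prev = entry["entry_hash"]
        return True
```

Starting the chain from the artwork’s hash is what makes the link two-way in this sketch: the token history points at a specific file, and the file’s provenance record names the token.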

We’ve long been guided by the principle that giving artists credit for their work results in more opportunities for them. Here we have a real solution for the NFT ecosystem: identifying the artist behind the art.

Are you working in this area? We'd love to hear from you. Email us.

CAI’s Andy Parsons on Episode 51 of Infotagion with Damian Collins MP

British podcast Infotagion dives into disinformation, media literacy, and how the CAI is part of the solution.

Andy Parsons, Director of the Content Authenticity Initiative, was a guest on the most recent episode of the Infotagion Podcast with Damian Collins MP, alongside Anna-Sophie Harling from NewsGuard. The conversation focused on how technology is part of the solution for tackling disinformation in the long term.

Listen to Episode 51 of Infotagion to learn how the CAI contributes to the debate on tackling disinformation and fake news.

CAI Member Serelay Launches First Publicly Available Software Apps with CAI Technology Enabled

UK-based Serelay apps join the CAI constellation of end-to-end, interoperable implementations.

By the CAI team

Our Member Community

Since rolling out the Content Authenticity Initiative membership program in December, we’ve welcomed a broad range of participants, from publishers to advocacy groups to photojournalists to hardware manufacturers to software companies. We’ll be highlighting activity from within our member community as part of our core mission to support prototype development with real-world applications of secure provenance details at scale. Joining the previously supported hardware prototype from Qualcomm and Truepic, and the Starling and Reuters 78 Days project, we are pleased to announce our first software prototype from the CAI member community from Oxford, UK-based Serelay Technologies.

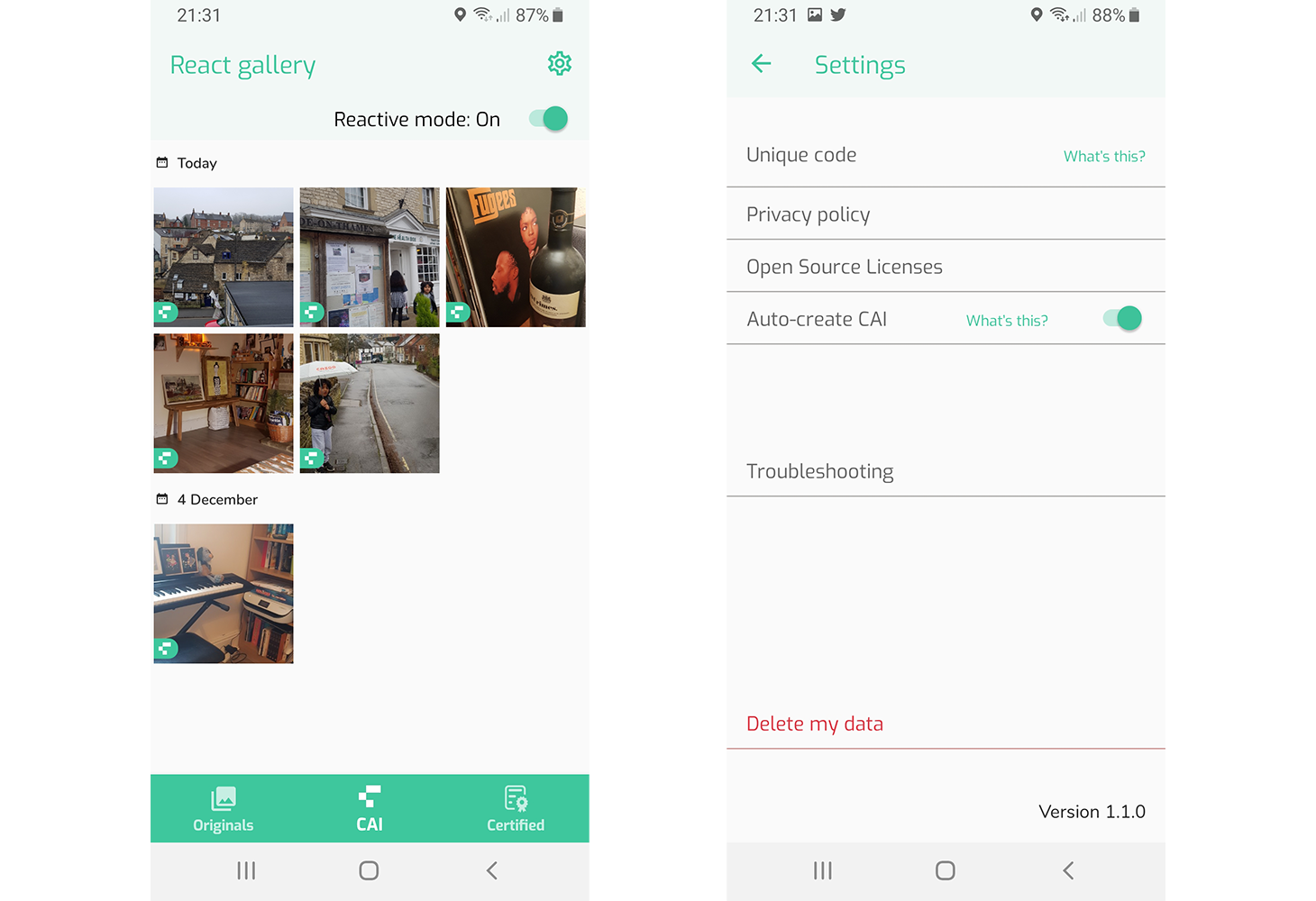

The First CAI Software Prototype

Serelay has announced that their two core imaging products, Idem and React, now incorporate CAI technology into their Trusted Media Capture software. Idem allows secure and verifiable capture of images through the Serelay camera and, to preserve user privacy, does not require registration. React offers the same capability through the user’s native smartphone camera. Serelay’s SDK implementation of these apps, also available now, offers this functionality to third-party developers.

Through their implementation of CAI technology, Serelay uses Trusted Media Capture software to record hundreds of anonymized data points, such as location, Wi-Fi networks, or cell tower readings, at the moment an image is captured so the image can later be authenticated. A side-by-side comparison can then reveal even a single altered pixel or video frame. By sharing provenance information for both images and videos, Serelay’s apps join the CAI constellation of end-to-end, interoperable implementations.
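As a rough illustration of what a side-by-side comparison can surface, the sketch below lists every pixel position where two equally sized images differ. It is a generic example, not Serelay’s method: images are modelled as hypothetical 2-D lists of pixel values rather than decoded image files, and the function name is our own.

```python
def changed_pixels(original, current):
    """Return (row, col) coordinates whose pixel values differ.

    Images are modelled as equally sized 2-D lists of pixel values,
    a hypothetical stand-in for decoded image data.
    """
    if len(original) != len(current) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(original, current)
    ):
        raise ValueError("images must have the same dimensions")
    return [
        (r, c)
        for r, (row_a, row_b) in enumerate(zip(original, current))
        for c, (pa, pb) in enumerate(zip(row_a, row_b))
        if pa != pb
    ]
```

For example, comparing `[[0, 0], [0, 0]]` with `[[0, 0], [0, 255]]` flags only position `(1, 1)`, the single altered pixel.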

A side-by-side comparison of images from Serelay React as seen in the CAI Verify tool.

What’s Next

We applaud the extensive work done by the Serelay team to bring the early CAI specifications to life in Idem and React. This noteworthy achievement is truly pioneering, and we look forward to sharing more about Serelay’s work and the work of other teams in the CAI community. For a deeper dive into another of these projects, please join us for our first members-only event on Wednesday, March 31, as we speak with Jonathan Dotan, co-author of our foundational white paper, about the 78 Days project. He’ll be joined by Reuters senior photojournalist Lucy Nicholson in conversation with our head of education and advocacy, Santiago Lyon. You can find out more about the event, A New Model for Trust in Photojournalism, and join us as a CAI member here. As a member, you’ll be the first to hear about exclusive member events and will get early access to our SDK, plus prototype support from the CAI team.

Adobe co-founds the Coalition for Content Provenance and Authenticity (C2PA) standards organization

Along with Microsoft, Truepic, Arm, Intel and the BBC, Adobe has founded the Coalition for Content Provenance and Authenticity (C2PA) as the organization for open specification development of content provenance standards.

By Andy Parsons, Content Authenticity Initiative Director

Today I’m proud to announce a critically important milestone for the Content Authenticity Initiative. Along with Microsoft, Truepic, Arm, Intel and the BBC, Adobe has founded the Coalition for Content Provenance and Authenticity (C2PA). The C2PA is the organization that will advance the work of open specification development toward broad adoption of content provenance standards.

When we started the CAI, we said it would be developed openly, collaboratively with many partners, and that it would embrace diverse areas of expertise and viewpoints. As a formal coalition for standards development, the C2PA adopts this mission under the auspices of the Linux Foundation via the Joint Development Foundation (JDF) project structure. Work on CAI specifications done to date will move to the C2PA and will join work from Microsoft and BBC-backed Project Origin, which has focused on provenance for publisher workflows. The C2PA is a mutually governed consortium created to accelerate the pursuit of pragmatic, adoptable standards for digital provenance, serving creators, editors, publishers, media platforms, and consumers. Its charter prioritizes careful, thoughtful architecture for a future of verifiable integrity in media.

The founding members of the C2PA and those that join the Coalition after today share a commitment to creating standards that bolster the public’s understanding of what is real and what is not. We aim to enable cryptographically verifiable facts about content to engender trust across all the surfaces through which we consume content.

At the intersection of media, journalism, creativity and technology lies a core, essential requirement of transparency. It enables those experiencing content to know what that content is and where it came from. Without transparency, the continued increase in misinformation we have witnessed in recent months is inevitable. But, armed with secure, verifiable provenance data, fact-checkers, human rights defenders, and consumers alike will be able to make informed decisions about what to trust online. We, along with the other founding members of the C2PA, aspire to rebuild the public’s trust in online content through broad adoption of this idea.

As the C2PA undertakes the work of creating the technical foundation for a future of transparency, the CAI will continue to foster a broad, expansive, and diverse community of stakeholders. We will do this through three key areas of focus:

With advocacy and education, we will promote the core idea of digital provenance and bring feedback to standardization efforts. With events, newsletters, and a growing community we will celebrate progress and embrace challenges in the ever-evolving media and creative ecosystems, together.

By prototyping implementations with partners in this community, we will vet the foundational technical concepts in real-world contexts at scale. We recognize a clear responsibility to not only specify and promote the core tenets of provenance, but to build them using running code and approachable user experiences.

And, with Adobe’s unequalled community of creative professionals, from photojournalists to artists and creators of groundbreaking content, we will deliver CAI creation experiences across the Creative Cloud, ensuring interoperability with others building devices, applications, and platforms, all using the emerging standards of the C2PA.

Underlying the work done by Adobe on the CAI is a strong commitment to openness—open source, open specifications, open security review, and above all, open participation and a welcoming community. This commitment extends Adobe’s long-standing focus on open standards development, which has produced, among others, XMP, DNG, and PDF. It is part of our DNA, and it is a core part of everything we do in the CAI.

2020 was a year of discovery and foundation building for the CAI. The team built on the momentum generated by the CAI white paper by forging partnerships for future collaboration, which culminated in December’s launch with Truepic and Qualcomm, demonstrating the pragmatic interoperability of cameras, editing tools, and publishing processes, all centered around the CAI provenance data specification. This marked the first full lifecycle demonstration of the technology and propelled the CAI into its next phase of progress.

Today’s founding of the C2PA represents an important step forward. However, the founding members represent but a small number of the organizations and individuals whose work will contribute to the path forward. I encourage standards-focused technologists to learn more about joining the C2PA, and I invite everyone reading this to be part of the journey ahead by joining the CAI.

CAI spotlight: ‘78 Days,’ a verified photo archive from Starling and Reuters

A new photojournalism project created with cryptographic methods and decentralized protocols for secure capture, storage, and verification of images uses CAI specifications and tools.

By Andrew Kaback, Content Authenticity Initiative Product Manager

You’ve likely seen this scenario play out online: Photojournalists who work diligently to build a reputation for trustworthy and accurate reporting find their images taken out of context, or even edited by others. So how can newsrooms, editors, photojournalists, and publishers address this? Project Starling, jointly developed by the USC Shoah Foundation and Stanford University’s Department of Electrical Engineering, presents an approach using cryptographic methods and decentralized protocols to securely capture, store, and verify the accuracy of images. The Starling team partnered with Reuters and the blockchain data integrity startup Numbers to apply CAI attribution standards to the resulting photojournalism project, 78 Days. Today, Starling is launching their work as a prototype archive that exemplifies tamper-evident transparency around the capture and editing process of photojournalism.

How they did it

Starling co-founder Jonathan Dotan is an early collaborator and champion of the CAI, notably as a co-author of the foundational white paper we published last August. Through early access to CAI specifications and tooling, Dotan and the Starling team implemented our technology so that 78 consecutive days of photographs produced by Reuters photojournalists were created with cryptographically secure metadata. Their goal was to use and evaluate tools for secure capture, storage, and verification of images. See the results and more about the image authentication technology used in the Starling prototype archive using CAI attribution standards here.

How you can see it

The 78 Days project uses prototype software developed by the CAI team at Adobe to display relevant data accompanying an image. Visitors to the 78 Days project website will see a clickable information icon in the upper right corner of images on the site. If you scroll to the bottom of the preview box and click the View More button, you’ll have access to Verify, a new CAI tool that anyone can use to inspect images and compare previous versions or other pictures used in their creation.

Why it matters

Applying CAI technology to images provides consumers with the information they need to make better, more informed decisions about what to trust online. 78 Days definitively demonstrates how news organizations can apply secure provenance to combat misinformation in newsrooms and beyond. We look forward to continuing to work with pioneering organizations across hardware, software, publishing, and social media to push forward the core CAI tenet of restoring trust in media through transparency.

Welcoming Santiago Lyon

The venerable photojournalist and industry executive joins the CAI team as Head of Advocacy and Education.

By Andy Parsons, Content Authenticity Initiative Director

Today I am delighted to welcome Santiago Lyon to the CAI team as Head of Advocacy and Education.

As we enter year two of the Initiative, Adobe’s commitment to responsible use of powerful creative tools has never been stronger, and along with it comes our mission to create technology that restores integrity to content of all kinds.

With more than 30 years of experience as a photojournalist and industry executive (notably as vice president and director of photography of the Associated Press), Santiago’s career has focused on truth, integrity and empowering photographers to tell important, compelling stories. He has earned many awards for his work on conflict, including two World Press Photo prizes and the Bayeux prize for war photography.

Santiago brings a truly unique perspective to the CAI, based on a lifetime of dedication to media integrity. In Content Authenticity, he sees the potential to bring verifiable provenance to an industry increasingly challenged with inauthentic content and disinformation.

Beyond our work on standards, technology and partnerships, an essential tenet of the CAI is to build awareness through pragmatic education of creators who will use CAI tools in their work. With the leadership Santiago brings to the team, we look forward to great progress both teaching and learning from the photojournalism community.

This is a good day for the CAI. Welcome, Santiago!

Restoring Trust in the Age of (Dis)Information: The CAI Year in Review 2020

During our final event of the year, we announced a 2020 capstone achievement: the first-ever photos captured with the end-to-end system for image provenance developed with CAI standards.

By Andy Parsons, Content Authenticity Initiative Director

Adobe’s Content Authenticity Initiative (CAI) team this week hosted our final virtual event of the year: Restoring Trust in the Age of (Dis)Information. During the event, we announced a 2020 capstone achievement: the first-ever photos captured with the end-to-end system for image provenance developed with CAI standards. This is a critical step toward combatting disinformation and is the result of a close collaboration between Adobe, Qualcomm and Truepic.

The CAI is designing components and drafting industry standard specifications for a simple, extensible and trustworthy media provenance solution. This is being done in close partnership with an increasing number of collaborators, including Twitter and The New York Times. With inauthentic media shared online at an unmanageable pace, the CAI is working to create these standards at scale so that consumers and publishers can easily determine whether to trust visual content.

In just over a year since its inception, the CAI has achieved three critical goals:

First, we have set the groundwork for standards via a cross-industry specification effort, embracing diverse viewpoints and a broad set of stakeholders. In August, the CAI white paper was released with co-authors from Microsoft, BBC, The New York Times, Twitter, WITNESS and others. The paper lays out the foundation and guiding principles for a robust but simple architecture that provides strong trust assurances for expressing facts about any type of content, starting with images.

Second, we introduced CAI fundamentals into Photoshop, an Adobe Creative Cloud flagship product. Thanks to Adobe’s enormous reach to all manner of creative professionals who depend on and use Creative Cloud in their work daily, we have the ability to test and hone the CAI user experience to make it simple, impactful, and important to millions of photographers, video producers and visual artists. We’ve started with the CAI Photoshop Private Beta, announced at MAX this year, and 2021 will see further CAI expansion into additional apps and use cases.

Last, we have built strong partnerships for prototyping and interoperability. Our goal is to ensure easy and wide adoption of the CAI standards. In order to achieve this at scale, it is imperative that we invest in partnerships across hardware, software, publishing and social media platforms to further the reach and impact of the CAI through functional prototypes. It is this pursuit that has culminated in a major announcement this week.

Bringing key partners together for the Restoring Trust in the Age of (Dis)Information Event

Our December 8th event, Restoring Trust in the Age of (Dis)Information, marked the first pragmatic end-to-end implementation of the CAI standard, from image capture through Photoshop editing to publishing. This was possible through the work of CAI collaborators Qualcomm and Truepic. Together we achieved the first secure hardware implementation of the CAI standard, which will soon become available to consumers. This technology attaches key data facts from the moment of capture to photos taken in “secure mode” on smartphones built on Qualcomm’s Snapdragon 888 platform. We gave Sara Naomi Lewkowicz, an award-winning photojournalist, early access to a prototype with this technology enabled. You can see the resulting images (the first-ever photos taken with a CAI-enabled device) and read more in our first case study.

We took the opportunity to assemble a group of industry experts, Sara included, to reflect on the problem of inauthentic media and the role of the CAI in the ultimate restoration of trust.

Nina Schick, author of the book Deepfakes: The Coming Infocalypse, moderated a conversation between panelists “either in or at the nexus of technology, journalism and society.” We shared new research released by Adobe around perception of disinformation in media by consumers and creative professionals and mentioned work to come in 2021, including expanding CAI standards to video content.

From a publisher perspective, Marc Lavallee (New York Times R&D) spoke about building systems that allow information to be understood as accurate as it travels around the internet. This may complicate their work, but it is central to the mission of a news organization like the Times.

Sherif Hanna (Truepic VP of R&D) reminded us of what’s at play: society’s shared sense of visual reality, and how important it is that both public and private sectors work together to create open standards.

From a social media perspective, Fabiana Meira (Misleading Information Product Manager at Twitter) weighed in on the public trust and safety aspect of protecting information as it’s shared.

We’re just getting started

Looking ahead, we’re now welcoming applications for CAI membership, which will allow early access to CAI specifications and source code, invitations to future events, and collaboration with the CAI team on prototypes. In the new year, we will continue developing standards and source code in the open. This project is only possible with transparency, open development and deep collaboration among a broad set of diverse stakeholders. We hope you’ll join us.

Leaders discuss how industry and government are working to combat deepfakes and inauthentic content

Adobe participated in a virtual event hosted by the Software & Information Industry Association, the principal trade association for the software and digital content industry.

Dana Rao and other leaders discussed the growing threat of disinformation and the efforts underway across industry and government organizations to help restore trust in online content. Andy Parsons, Director of the Content Authenticity Initiative (CAI), also provided a demonstration of Adobe’s latest prototype CAI attribution tool. Public sector and industry speakers were impressed with the demo and excited about the work being done by the CAI. A blog recapping the event and the event recording can be found here.

The Content Authenticity Initiative unveils content attribution tool within Photoshop and Behance

Adobe believes in the power of creativity — for all — to make our world a more beautiful, interesting place. We also believe that creativity is even more powerful when combined with transparency and trust.

By Will Allen

Adobe believes in the power of creativity — for all — to make our world a more beautiful, interesting place. We also believe that creativity is even more powerful when combined with transparency and trust. One year ago at Adobe MAX, we launched the Content Authenticity Initiative (CAI) to address the challenges of deepfakes and other deceptively manipulated content. Adobe’s heritage is built on trustworthy creative solutions, and the CAI’s mission is to increase trust and transparency online with an industry-wide attribution framework that empowers creatives and consumers alike.

Today, we are unveiling a preview of our attribution tool in Adobe Creative Cloud. This feature will be available to select customers in a beta release of Photoshop and Behance in the coming weeks. The tool is built using an early version of the open standard that will provide a secure layer of tamper-evident attribution data to photos, including the author’s name, location and edit history. This will help consumers better understand the content they view online and give them greater confidence to evaluate its authenticity.

This is a significant milestone for the Content Authenticity Initiative that follows countless hours of technical development and information sharing with our collaborators including The New York Times Company, Twitter, Inc., Microsoft, BBC, Qualcomm Technologies, Inc., Truepic, WITNESS, CBC and many others. You can read more about our approach in the technical white paper we released in August. Collaborating with leaders across a variety of industries will help to create an industry-wide attribution framework, making the tool easier for consumers and creatives alike to use.

In addition to helping us understand what to trust online, attribution matters for another critical reason: Providing credit to creators for their work. Think of it as a simple equation: Exposure (for your creative work) plus attribution (so people know who created it) equals opportunity (for more collaborations or jobs). Now, with a tamper-evident way to seamlessly attach your name to your creative work, you can go viral and you’ll still get credit.

We believe attribution will create a virtuous cycle. The more creators distribute content with proper attribution, the more consumers will expect and use that information to make judgement calls, thus minimizing the influence of bad actors and deceptive content.

Ultimately, a holistic solution that includes attribution, detection and education to provide a common and shared understanding of objective facts is essential to help us make more thoughtful decisions when consuming media. Today is a huge leap forward for the CAI, but this is just the beginning.

For more information, visit https://contentauthenticity.org. If you have thoughts or suggestions, please let us know by emailing contentauthenticity@adobe.com.

CAI Achieves Milestone: White Paper Sets the Standard for Content Attribution

Today marks a significant milestone for the Content Authenticity Initiative as we publish our white paper, “Setting the Standard for Content Attribution”.

By Andy Parsons, Director, Content Authenticity Initiative

Today marks a significant milestone for the Content Authenticity Initiative (“CAI”) as we publish our white paper, “Setting the Standard for Content Attribution”. It addresses the mounting challenges of inauthentic media and our proposal for an industry-standard content attribution solution that will enable creators to securely attach their identity and other information to their work before they share it with the world.

Addressing a Growing Problem

The need to combat intentionally deceptive content has never been more urgent. According to a 2019 study by the Pew Research Center, nearly two-thirds of Americans say that synthetic or altered images and videos create confusion about the facts of current issues and events. In recent months, social media sites and news organizations have begun applying “manipulated media” tags to doctored images and videos that are meant to mislead or stoke division among the public. The challenge is only growing as the volume of inauthentic media increases.

Efforts to address content authenticity have largely focused on using AI to detect deepfakes and other altered media. And that effort is important. But shouldn’t there also be a transparent way to inform the public about who created the original photos and videos, and how these assets were changed over time? Isn’t it equally important that creative professionals and photojournalists receive credit for their work?

That’s exactly the goal of the CAI. As explained in our white paper, we propose adding a layer of secure provenance which expresses relevant facts about how media is altered from the moment of creation to the moment of audience experience. This technique will eliminate much of the uncertainty currently facing editors and authors of creative content and provide greater transparency into the origins of online media for consumers.

How Attribution Works

For the last nine months, the CAI has been hard at work with a diverse interdisciplinary group of collaborators, including industry leaders from technology, media, academia, advocacy groups, government and think tanks. Our mission is to develop an open, extensible attribution solution that can be implemented across devices, software, publishing and media platforms.

Today, attribution information is typically embedded in the metadata of digital assets, making it corruptible and untrustworthy. Even when careful steps are taken to verify metadata in production workflows, most assets appear online without the information intact, leaving content moderators and fact-checkers to reconstruct the provenance and context of content through imperfect methods. CAI data, in contrast, is cryptographically sealed and verifiable by an individual or organization along the path from creation to consumption.
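To make the contrast between ordinary metadata and sealed data concrete, here is a minimal sketch, not the CAI implementation. It assumes a shared secret key and uses HMAC-SHA256 from Python’s standard library as a stand-in for the public-key signatures a real system would use; the names `SIGNING_KEY`, `seal`, and `verify` are hypothetical. The point it illustrates is tamper evidence: once metadata is bound to the exact image bytes, changing either one is detectable.

```python
import hashlib
import hmac
import json

# Hypothetical key for illustration only. A real provenance system would use
# public-key signatures; stdlib HMAC stands in here as an assumption.
SIGNING_KEY = b"example-secret-key"


def canonical_bytes(metadata: dict) -> bytes:
    """Serialize metadata deterministically so identical facts give identical bytes."""
    return json.dumps(metadata, sort_keys=True, separators=(",", ":")).encode("utf-8")


def seal(image_bytes: bytes, metadata: dict) -> str:
    """Bind the metadata to the exact image bytes and return a hex tag."""
    image_digest = hashlib.sha256(image_bytes).digest()  # fixed 32-byte prefix
    message = image_digest + canonical_bytes(metadata)
    return hmac.new(SIGNING_KEY, message, hashlib.sha256).hexdigest()


def verify(image_bytes: bytes, metadata: dict, tag: str) -> bool:
    """Recompute the tag; changing the image or any metadata field yields False."""
    return hmac.compare_digest(seal(image_bytes, metadata), tag)


if __name__ == "__main__":
    image = b"raw image bytes"
    meta = {"author": "Example Photographer", "location": "Example City"}
    tag = seal(image, meta)
    print(verify(image, meta, tag))  # True

    # Plain metadata can be rewritten silently; sealed metadata cannot.
    meta["author"] = "Someone Else"
    print(verify(image, meta, tag))  # False
```

Note that the seal does not stop anyone from editing the metadata; it makes the edit evident to anyone who checks.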

Our Approach

We believe the CAI solution described in the paper achieves a critical balance of security, resilience, privacy and interoperability. At its core, CAI attribution introduces constructs called assertions and claims. Simply put, assertions capture the who, what, and how of asset creation and modification while claims add a layer of cryptographic verifiability and trust. In combination with a growing set of supported file formats, and the fundamental principle that CAI data can be stored in files or linked in the cloud, assertions and claims can become the lingua franca of secured metadata for systems from mobile devices to social media.
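The split between assertions and claims can be sketched as follows. This is a simplified illustration under stated assumptions, not the structure defined in the white paper: the field names (`label`, `asset_hash`, `assertions`, `signature`) are hypothetical, assertions are plain dictionaries, and HMAC again stands in for a real certificate-backed signature. Each assertion records a fact, and the claim lists each assertion by hash, binds them to the asset’s hash, and signs the result.

```python
import hashlib
import hmac
import json

# Hypothetical key; a real claim would be signed with a certificate-backed private key.
SIGNING_KEY = b"example-secret-key"


def digest(obj) -> str:
    """SHA-256 over a canonical JSON encoding of obj."""
    data = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


def sign(unsigned_claim: dict) -> str:
    """HMAC stand-in for a digital signature over the claim contents."""
    return hmac.new(SIGNING_KEY, digest(unsigned_claim).encode("ascii"), hashlib.sha256).hexdigest()


def make_claim(asset_bytes: bytes, assertions: list) -> dict:
    """Reference each assertion by hash, bind them to the asset, then sign."""
    claim = {
        "asset_hash": hashlib.sha256(asset_bytes).hexdigest(),
        "assertions": [{"label": a["label"], "hash": digest(a)} for a in assertions],
    }
    claim["signature"] = sign(claim)  # computed before the key is added
    return claim


def verify_claim(asset_bytes: bytes, assertions: list, claim: dict) -> bool:
    unsigned = {k: v for k, v in claim.items() if k != "signature"}
    if not hmac.compare_digest(sign(unsigned), claim.get("signature", "")):
        return False  # the claim itself was altered
    if unsigned["asset_hash"] != hashlib.sha256(asset_bytes).hexdigest():
        return False  # the asset no longer matches what was claimed
    # Every assertion presented must match, in order, the hash the claim recorded.
    return unsigned["assertions"] == [{"label": a["label"], "hash": digest(a)} for a in assertions]


if __name__ == "__main__":
    photo = b"raw image bytes"
    assertions = [
        {"label": "creator", "data": {"name": "Example Photographer"}},
        {"label": "edits", "data": {"actions": ["crop", "adjust exposure"]}},
    ]
    claim = make_claim(photo, assertions)
    print(verify_claim(photo, assertions, claim))  # True
    assertions[1]["data"]["actions"].append("remove object")
    print(verify_claim(photo, assertions, claim))  # False
```

Keeping the assertions separate from the signed claim means the facts themselves can be stored in the file or linked in the cloud, while the claim still lets anyone check that they have not changed.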

At the same time, we pay careful attention to situations where full transparency is not prudent. For cases where anonymity is essential and revealing too much detail can cause harm, the CAI system supports intentional use through opt-in parameters and redaction of prior assertions when warranted. Critically, embracing this functionality allows users to share only the details they wish to share without compromising the ability to trace provenance downstream.
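Opt-in sharing and redaction might look roughly like the sketch below. It is an assumption-laden illustration: the source does not specify how redactions are recorded, so the `redacted` list, the labels, and the helper names `build_assertions` and `redact` are all hypothetical. The creator includes only the facts they choose, and a later redaction drops an assertion’s content while leaving a visible record that something was withheld.

```python
from typing import List, Optional


def build_assertions(edits: List[str], author: Optional[str] = None,
                     location: Optional[str] = None) -> List[dict]:
    """Opt-in: only facts the creator chooses to share become assertions."""
    assertions = [{"label": "edits", "data": {"actions": edits}}]
    if author is not None:
        assertions.append({"label": "creator", "data": {"name": author}})
    if location is not None:
        assertions.append({"label": "location", "data": {"place": location}})
    return assertions


def redact(manifest: dict, label: str) -> dict:
    """Return a copy of the manifest with one prior assertion's content removed.

    Hypothetical convention: the label is recorded under "redacted", so
    downstream readers can see that something was withheld while the
    remaining history stays traceable.
    """
    kept = [a for a in manifest["assertions"] if a["label"] != label]
    if len(kept) == len(manifest["assertions"]):
        raise KeyError(f"no assertion labeled {label!r}")
    return {**manifest, "assertions": kept, "redacted": manifest.get("redacted", []) + [label]}


if __name__ == "__main__":
    # A human rights activist shares the edit history but not their name.
    manifest = {"assertions": build_assertions(["crop"], location="Example City")}
    print([a["label"] for a in manifest["assertions"]])  # ['edits', 'location']
    # Later, the location is judged too sensitive to publish.
    safer = redact(manifest, "location")
    print([a["label"] for a in safer["assertions"]], safer["redacted"])  # ['edits'] ['location']
```

Recording the redaction explicitly, rather than silently deleting the assertion, is what would let a downstream reader distinguish "withheld on purpose" from "tampered with".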

Our white paper also outlines some CAI-enabled use cases, which demonstrate the essential characteristics of the attribution system we are designing. We imagine the system in the hands of creative professionals, photojournalists and human rights activists who may benefit from the promising potential CAI offers. There are many more scenarios to explore and we intend to do that through continued collaboration with stakeholders.

With widespread adoption of CAI’s attribution specifications, we hope to significantly increase transparency in online media, provide consumers with a way to decide who and what to trust, and create an ecosystem that rewards impactful, creative work.

The Collaborators

We’ve reached this goal through the generous participation of the co-authors and collaborators who represent a diverse set of viewpoints. I would like to acknowledge the time and thought contributed by the CAI collaborators. Without their expertise and vision this milestone could not have been reached. The full list of authors and collaborators is below.

Authors:

Andy Parsons (Adobe)

Leonard Rosenthol (Adobe)

Eric Scouten (Adobe)

Jatin Aythora (The British Broadcasting Corporation)

Bruce MacCormack (CBC/Radio-Canada)

Paul England (Microsoft Corporation)

Marc Lavallee (The New York Times Company)

Jonathan Dotan (Stanford Center for Blockchain Research)

Sherif Hanna (Truepic)

Hany Farid (University of California, Berkeley)

Sam Gregory (WITNESS)

Contributors:

Will Allen (Adobe)

Pia Blumenthal (Adobe)

John Collomosse (Adobe)

Oliver Goldman (Adobe)

Andrew Kaback (Adobe)

Gavin Peacock (Adobe)

Charlie Halford (The British Broadcasting Corporation)

Scott Lowenstein (The New York Times Company)

Thomas Zeng (Truepic)

Fabiana Meira Pires De Azevedo (Twitter)

Corin Faife (WITNESS)

Our Work Continues

Today’s white paper is the result of many hours of problem definition, system design, use case exploration and vigorous debate with multiple stakeholder groups, culminating in the ideas expressed in the document. At the same time, we also humbly embrace the magnitude of our mission in knowing that this is only a first step; we’ve arrived at the starting line of a long journey. As we share our work, we are advancing on the path toward an industry standard for digital content attribution. And with this first step, we look optimistically toward a future with more trust and transparency in media.

Please follow our work and reach out to be involved.

Congressional leaders, industry and media experts discuss actions to combat inauthentic content

Adobe and The New York Times participated in a virtual event hosted by the global research organization software.org.

Dana Rao and other leaders discussed legislative and industry efforts addressing the impact of misinformation, including CAI momentum. Across the board, public sector and industry speakers were enthusiastic and optimistic about the work being done by the CAI. A blog recapping the event and the event recording can be found here.

Adobe Reinforces Commitment to Content Authenticity

When you see a photo or video on social media, on the evening news, or through something your friend shared, how do you know you can trust it? How can you be sure the content hasn’t been tampered with to sway your thinking?

By Will Allen, VP Product, Adobe

When you see a photo or video on social media, on the evening news, or through something your friend shared, how do you know you can trust it? How can you be sure the content hasn’t been tampered with to sway your thinking?

Every day we are faced with split-second decisions to believe, or not believe, what we’re seeing. For the better part of a year, the goal of Adobe’s Content Authenticity Initiative has been to build a tool that helps consumers decide who and what to trust.

Since we announced the program at Adobe MAX last year, we’ve been working closely with the New York Times, Twitter, and dozens of other companies, nonprofit groups, and policy experts. Our goal is to build a scalable, open, privacy-conscious solution shared by publishers, platforms, creative tool makers, and content creators.

While there is a long road ahead, today marks an important milestone in this journey as we introduce our first technical white paper that outlines our approach. The attribution white paper is currently being refined and reviewed by our partners and stakeholders.

Our Mission

A quick word on what we’re trying to achieve and why I think this approach will ultimately help creators and consumers alike.

Imagine this scenario: one individual edits a photo or video to better capture what they see as the truth of a situation; yet to another observer, that same edited content might obscure what they believe actually happened. This is a common predicament, and there is a reason these subjective interpretations can diverge so widely: namely, there is no readily available shared objective understanding of what happened to that piece of media.

Who created the photo/video, where it was taken, and how it was edited are all called into question. Currently, when someone shares their creation, there is no viable or standard solution for attaching their name to the content, or outlining the edits they made. The truth, in that scenario, doesn’t have a foundation.

We believe that providing a common and shared understanding of facts is essential for consumers as they aim to make thoughtful decisions when consuming media.

The White Paper

Enter the Content Authenticity Initiative. Since the introduction of the program last fall, we’ve diligently worked on our technical approach. Our white paper provides insight into how we’re building the system that will enable creators to permanently attach attribution data to their asset as they share it with the world.

Our privacy-conscious approach means we’re giving full control to the creator, who can choose whether they’d like to attach their name; show the entire edit history; or share the location where the photo or video was shot. This metadata is immutable, securely stored, and available for anyone to read.

The technical design put forward in the white paper supports four principles we consider essential:

Privacy. The system has flexibility around what identifying data is stored and how data can be redacted in cases where privacy concerns arise.

Interoperability. The components are straightforward to implement, with interoperability built in via clear data models and protocols.

Fit with existing workflows. We recognize the challenges of widespread adoption and begin to address them by ensuring that user experience consequences of incorporating CAI components are minimal, clear and unsurprising to end users.

Compatible with cloud and file-based uses. To reach adoption and standardization, the system embraces file-based workflows and cloud-enabled ones with equal fidelity so that applications on desktop, web, mobile and other platforms can enjoy seamless integrations.

Why This Matters

Now, why should this concern artists and creatives, and not just photojournalists?

I’ve been building and leading Behance, our community for creatives, for many years. Behance now has more than 22 million members and close to a million unique visitors per day, and one theme I hear constantly is the need for creatives to get credit for their work.

This simple equation has always been one of my guiding principles: exposure (for your work) plus attribution (so folks know who created it) equals opportunity. But if there is no consistent way to ensure you can get attribution, finding opportunity is a lot more challenging.

And we all see this every day: how often does a piece of creative work go viral, publicly detached from the person who first made it?

Attribution Tool

The attribution solution we are architecting will allow any creative, whether a photographer, illustrator, or digital artist, to attach their name to, and thereby get credit for, their work as it travels across the internet.

We’re building this solution as an open standard so that platforms, news organizations, and individuals alike will be able to read this metadata and identify the original creator, taking much of the guesswork out of authenticity. And, more credit for the artist begets more opportunities for jobs and collaboration.

I know we will face a myriad of challenges as we build this with our partners and the broader community, but we’re just getting started. The best part of this process is being able to build this system together, in the open, with an amazing group of companies and experts. If we’re missing something, please let us know. And stay tuned for more.

CAI represented in the EU40 discussion

Adobe participates in the EU40 discussion “The Era of Misinformation” about the CAI approach. Kate Brightwell discusses the long-term benefits of an attribution-based system at an event addressing the dangers of misinformation, particularly during the COVID-19 pandemic.

CAI at Social Media Week: Paving the Way Toward Trusting Media Again

CAI collaborators Andy Parsons (Adobe), Sam Gregory (WITNESS) and Jeff McGregor (Truepic) speak at Social Media Week on content provenance, the challenges of restoring trust and how new technologies will form the foundation for transparency in media.

CAI white paper collaborators come together for the first working meeting

The CAI gathered for its first working meeting toward publishing a white paper outlining our approach to content attribution. Collaborators included representatives from social media, software, media, academia and nonprofit organizations as we addressed solutions for the entire landscape of digital media.
